Frank D. (Tony) Smith, Jr. - 2009 - http://www.valdostamuseum.org/hamsmith/

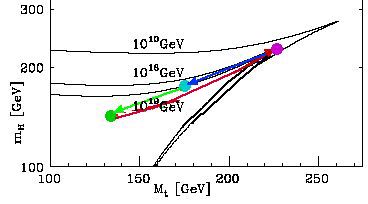

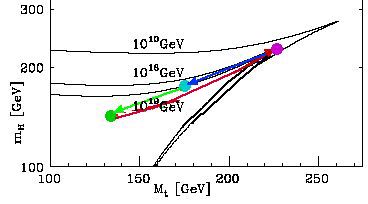

The 3 states of the T-quark-Higgs system are

with the cyan 8-dimensional Kaluza-Klein point being on the Triviality/Unitarity boundary and with

"... the top quark condensate proposed by Miransky, Tanabashi and Yamawaki (MTY) and by Nambu independently ... entirely replaces the standard Higgs doublet by a composite one formed by a strongly coupled short range dynamics (four-fermion interaction) which triggers the top quark condensate. The Higgs boson emerges as a tbar-t bound state and hence is deeply connected with the top quark itself. ... MTY introduced explicit four-fermion interactions responsible for the top quark condensate in addition to the standard gauge couplings. Based on the explicit solution of the ladder SD equation, MTY found that even if all the dimensionless four-fermion couplings are of O(1), only the coupling larger than the critical coupling yields non-zero (large) mass ... The model was further formulated in an elegant fashion by Bardeen, Hill and Lindner (BHL) in the SM language, based on the RG equation and the compositeness condition. BHL essentially incorporates 1/Nc sub-leading effects such as those of the composite Higgs loops and ... gauge boson loops which were disregarded by the MTY formulation. We can explicitly see that BHL is in fact equivalent to MTY at 1/Nc-leading order. Such effects turned out to reduce the above MTY value 250 GeV down to 220 GeV ...".

In his book Journeys Beyond the Standard Model ( Perseus Books 1999 ) at pages 175-176, Pierre Ramond says:

"... The Higgs quartic coupling has a complicated scale dependence. It evolves according to d lambda / d t = ( 1 / 16 pi^2 ) beta_lambda where the one loop contribution is given by

beta_lambda = 12 lambda^2 - ... - 4 H ... The value of lambda at low energies is related [to] the physical value of the Higgs mass according to the tree level formula

m_H = v sqrt( 2 lambda ) while the vacuum value is determined by the Fermi constant ...

for a fixed vacuum value v, let us assume that the Higgs mass and therefore lambda is large. In that case, beta_lambda is dominated by the lambda^2 term, which drives the coupling towards its Landau pole at higher energies.

Hence the higher the Higgs mass, the higher lambda is and the close[r] the Landau pole to experimentally accessible regions. This means that for a given (large) Higgs mass, we expect the standard model to enter a strong coupling regime at relatively low energies, losing in the process our ability to calculate. This does not necessarily mean that the theory is incomplete, only that we can no longer handle it ... it is natural to think that this effect is caused by new strong interactions, and that the Higgs actually is a composite ...

The resulting bound on lambda is sometimes called the triviality bound.

The reason for this unfortunate name (the theory is anything but trivial) stems from lattice studies where the coupling is assumed to be finite everywhere; in that case the coupling is driven to zero, yielding in fact a trivial theory. In the standard model lambda is certainly not zero. ...".
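The Landau-pole argument in Ramond's passage can be illustrated with a minimal sketch. It keeps only the 12 lambda^2 term of beta_lambda, uses the tree level formula m_H = v sqrt( 2 lambda ), and assumes t = ln( mu / mu0 ) with mu0 = m_H and v = 246 GeV. Those conventions, the numerical value of v, and the function names are assumptions made for illustration and are not taken from the book.

```python
import math

V_GEV = 246.0  # assumed vacuum value v in GeV (not stated in the quoted passage)


def quartic_from_mass(m_higgs_gev, v_gev=V_GEV):
    """Invert the tree level formula m_H = v * sqrt(2 * lambda)."""
    return 0.5 * (m_higgs_gev / v_gev) ** 2


def landau_pole_scale(m_higgs_gev, v_gev=V_GEV):
    """Scale where lambda diverges when beta_lambda is truncated to 12 * lambda^2.

    Assumes t = ln(mu / mu0) with mu0 = m_H. Integrating
        d lambda / d t = 12 * lambda^2 / (16 * pi^2)
    gives 1 / lambda(t) = 1 / lambda0 - 3 * t / (4 * pi^2),
    which reaches zero at t_pole = 4 * pi^2 / (3 * lambda0).
    """
    lam0 = quartic_from_mass(m_higgs_gev, v_gev)
    t_pole = 4.0 * math.pi ** 2 / (3.0 * lam0)
    return m_higgs_gev * math.exp(t_pole)


if __name__ == "__main__":
    for m_h in (150.0, 250.0, 500.0):
        scale = landau_pole_scale(m_h)
        print(f"m_H = {m_h:5.0f} GeV -> truncated one-loop pole near {scale:.3e} GeV")
```

Because the gauge and Yukawa terms are dropped, the numbers are only qualitative; the point of the sketch is the trend that a heavier Higgs pulls the pole down toward accessible energies.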

Until the LHC delivers its desired energy and luminosity, the Fermilab Tevatron and its CDF and D0 experiments will provide the most useful data.

In early (1990s) data, both CDF and D0 saw evidence of all 3 states (green, blue, and magenta) in semileptonic data:

In Tommaso Dorigo's blog entry "Proofread my PASCOS 2006 proceedings" (5 September 2007), particularly comment 11 (by me) and comment 13 (Tommaso's reply to 11):

I said: "... With respect to the CDF figure ...[left above]... (colored by me with blue for the peak around 174 GeV ...[and a]... green peak around 140 GeV) ... ...[and]... the D0 figure ...[right above]... (colored by me with blue for the peak around 174 GeV ...[and a]... green peak around 140 GeV) ... ... what are the odds of such large fluctuations [ green peaks ] showing up at the same energy level in two totally independent sets of data? ...".

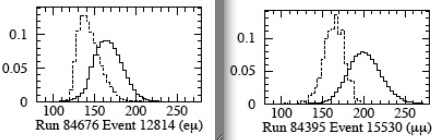

Dilepton events, such as those from the 1997 UC Berkeley PhD thesis of Erich Ward Varnes, show evidence for all 3 states. Event 12814 of Run 84676 seen as a 2-jet event (dashed line) shows the 130 GeV T-quark state and seen as a 3-jet event (solid line) shows the 172 GeV T-quark state, and Event 15530 of Run 84395 seen as a 2-jet event (dashed line) shows the 172 GeV T-quark state and seen as a 3-jet event (solid line) shows the 200+ GeV T-quark state.

In her Universita degli Studi di Siena and University of Illinois

Urbana Ph.D. Thesis, downloadable from Fermilab as

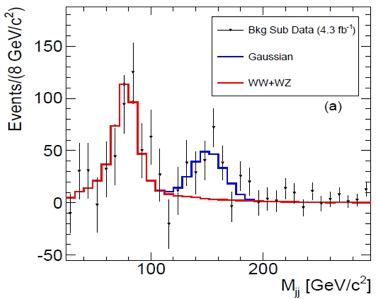

FERMILAB-THESIS-2010-51, Viviana Cavaliere says: "... We present the

measurement of the WW and WZ production cross section in p pbar

collisions at sqrt(s) = 1.96 TeV, in a final state consisting of

an electron or muon, neutrino and jets. ... for the [120; 160] GeV/c2

mass range ... an excess is

observed ... corresponding to a signi cance of 3.3 sigma ...".

Those results are also presented by her Fermilab collaboration CDF in arXiv 1104.0699 which says:

"... the invariant mass distribution of jet pairs produced in association with a W boson using data collected with the CDF detector which correspond to an integrated luminosity of 4.3 fb^-1 ... has an excess in the 120-160 GeV/c^2 mass range which is not described by current theoretical predictions within the statistical and systematic uncertainties. ...".

As Tommaso Dorigo said in his blog entry of 6 April 2011: "... This particle ... cannot be a Higgs boson ... the CDF analysis ... sought ... "semileptonic" WW or WZ decays ... these jets are not b-tagged ... the data overshoot the backgrounds in the region 120-160 GeV ... The ... significance ... is 3.2 standard deviations ... a 3-sigma effect ...".

I think that the 3-sigma effect shown by Viviana Cavaliere and 1104.0699 is confirmation of the 4-sigma effect seen in older Fermilab semileptonic data.
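For readers comparing the significances quoted above, a small sketch converts a number of standard deviations into a Gaussian tail probability. The one-sided convention and the function name are assumptions made for illustration; the statistical treatment actually used in the CDF analysis may differ.

```python
import math


def one_sided_p_value(n_sigma):
    """Probability that a unit Gaussian fluctuates upward by more than n_sigma."""
    return 0.5 * math.erfc(n_sigma / math.sqrt(2.0))


if __name__ == "__main__":
    # 3.2 and 3.3 sigma are the values quoted for the dijet excess; 4.0 is the older effect.
    for n_sigma in (3.2, 3.3, 4.0):
        p = one_sided_p_value(n_sigma)
        print(f"{n_sigma:.1f} sigma -> one-sided p = {p:.2e} (about 1 in {1.0 / p:,.0f})")
```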

A fully functioning LHC should shed much light on the T-quark - Higgs system,

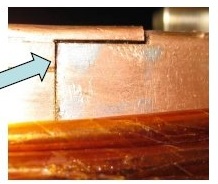

but the LHC has been delayed substantially due to such things as a September 2008 event and sloppy construction such as failure to solder copper conductor connections as discussed by J. Wenninger at www.pd.infn.it/planck09/Talks/Wenninger.pdf and by Jester on his Resonaances blog including his 27 May 2009 entry, where Jester said, about the September 2008 event:

"... An abnormally large resistance in one of the magnets acted as a heat source that quenched the superconducting cable at one interconnection. In case of a quench the current should start flowing for a few minutes through the copper bus-bar that encloses the cable until the energy stored in the magnet is removed. However, due to bad soldering of an interconnection the current could not flow normally and an electric arc was created. This melted copper, punctured the helium enclosure which led to spilling of 6 tons of helium into the tunnel. ... a quench (a phase transition from superconductivity to normal conductivity) of an LHC magnet can be induced by just a few millijoules of energy. That energy may be provided by a bunch of strayed protons from the beam. To avoid quenching, LHC cannot lose more than a millionth part of its beam. For comparison, the Tevatron loses about one thousandth of its beam during acceleration. ... the LHC will run at 10 TeV in the center of mass, instead of the nominal 14 TeV. The story goes as follows. Before installing, the LHC magnets have to be "trained", that is to say, to undergo a series of quenches to let their coils settle down at stable positions. After being installed in the tunnel they are supposed to come back to their test performance with no or few quenches. It turns out that the magnets provided by one of the three manufacturing companies need an extraordinary number of quenches to settle down. Although the company in question was not pointed at, everybody knows that the name is Ansaldo. In the case of that company, the number of quenches required for stable operation at 7 TeV per beam is currently unknown, it is probably somewhere between a hundred and a thousand. At the moment it is not clear if the LHC will ever reach 14 TeV; 12-13 TeV might be a more realistic goal. ...".

As I said in a September 2009 comment on Tommaso Dorigo's blog, when I saw pictures of big copper conductors just notched roughly to fit and then laid over each other without even the crudest solder connection (even USA primitive pioneers building log cabins, when they notched the logs and laid them over each other, they made a better connection by "soldering" them with mud or cement), I felt that the people doing the work did not give a damn about whether they did an effective job, they just wanted to "install" the copper as quickly as possible and then collect their contract paycheck, and nobody in management had the interest (or even the curiosity) to go down into the work area to see what was going on (sloppy assembly that would be obvious to the most casual observer - even a stupid bureaucratic observer), so now (December 2009) I worry about how many other bad things are still in there that could impede even running at 10 TeV. I think that CERN should take a much longer delay and look at EVERY sector in DETAIL to be sure it is working properly. To my mind, the cost may be another 6 to 8 months or so of delay but the benefit would be to eliminate some future bad event whose occurrence and correction could result in a delay 2 or 3 times longer (or maybe even something worse). I know that decisions are now made by committee, and that committee decisions tend to be compromises, but engineering is governed by the laws of nature (who never compromises). Compromise is for politicians, who are governed by laws of men (which are full of compromise). In my view, people like Wilson (who built Fermilab) and Rubbia (who ran CERN in a previous era) and Richter (who ran SLAC) and Rickover (who built the USA Nuclear Navy) were primarily engineers, and their machines all worked VERY well. I think that is what CERN now needs.

To me an engineer is somebody who puts things together so that they work and accomplish a specified mission (such as colliders to create collision events and detectors to detect and record the events), and if existing things do not have the necessary properties, the engineer then has to act as inventor to design and build things that do have the necessary properties. About Richter, Sadoulet once said "... Richter had persistently chosen techniques ... which were a little outdated ...", which meant that the techniques were known to actually work (as Panofsky, a former SLAC director, once said "the definition of conventional technology is that it worked once"). Sadoulet was with Rubbia at CERN, building the UA1 detector, so maybe he should share with Rubbia the title of "good engineer". As to how Rubbia valued detectors, he said:

"Detectors are really the way you express yourself.

To say somehow what you have in your guts.

In the case of painters, it's painting.

In the case of sculptors, it's sculpture.

In the case of experimental physics, it's detectors.

The detector is the image of the guy who designed it."

Rubbia had previously proposed detectors like UA1 at CERN, but they had been rejected by committees, which Rubbia described as being made up of "men of compromise", saying "Give them a proposal, they cut half of it. You can't cut half of this detector, there'd be no detector left." As to Rubbia's attention to details (compare the unsoldered connections of LHC etc), he said:

"If it turns out that the magnet does not work,

or the detector does not track,

we cannot blame anybody but ourselves.

You have to understand every part in your detector.

You have to know what makes it run, how it works."

When there were problems at UA1, Rubbia himself would be the one who understood the problem well enough to dictate a straightforward solution.

As to the CERN theorists, they made fun of the experiment people and made it clear that the pecking order in physics was theorist -> experimenter -> engineer. While UA1 was being built, the theorists put on a Christmas play in which the theorists (who seem to have had plenty of spare time to write and perform such plays) put these words in the mouth of an experiment character: "... What is physics coming to? ... It's a mess. I think I'll become an engineer. ... At least they get tea breaks ...".

As to what the pecking order should be, I will just say that there has been zero advance in realistic theoretical physics since the Standard Model (with the exception of my model, of course) while experiments have done a brilliant job of producing tons of data, almost all of which serve to verify the accuracy of the 30-year old Standard Model, if you allow it to have some massive neutrinos, and the experimental machines themselves are (when properly constructed) the pinnacle of human civilization.

(To give credit where credit is due, the quotes and most of the narrative above were quoted or paraphrased from the book Nobel Dreams by Gary Taubes (Random House 1986).)

In that same September 2009 thread of Tommaso Dorigo's blog, Mike Harney said:

"... It just seems like the LHC is in what I call "oscillation mode" - a highly technical term that describes the state of a project when it has lost convergence on becoming a reliable piece of hardware because it is in the process of not fixing root problems but rather doing a patch job which results in releasing a new set of gremlins that are waiting in the wings. As every good "engineer" knows (I put this because anybody can be an engineer after suffering and fixing a few problems), you want to eliminate 90%+ gremlins on the first prototype in order to converge on a solution. This convergence theorem is known to be correct because after decimating so many gremlins on the first prototype, the remaining ones don't stand a chance of victory without the assistance of their brothers in the never-ending shell game that results when you are in "oscillation mode". All of this being said, I can say without a doubt that LHC is definitely in "oscillation mode" and as somebody previously put it, they really need to get to the root of the problems and not settle for the top-down approach to project management but rather visit the welders on site and let them know their performance is being monitored (and blogged about!). ...".

However, whenever the LHC gets fixed and up and running, the Higgs search program it should undertake has been described in an October 2006 article by Andy Parker in PhysicsWeb at http://physicsweb.org/articles/world/19/10/3, which article said in part ( including some material from a referenced article at http://physicsweb.org/articles/world/19/10/3/1/PWexp4%5F10%2D06 ):

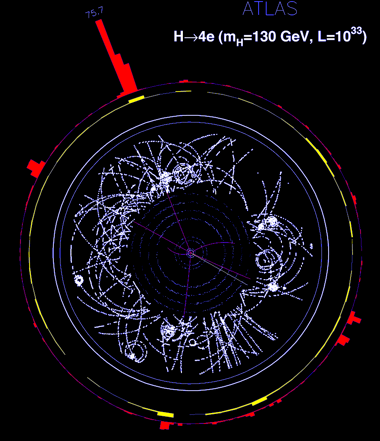

"... One of the main goals of the LHC is to discover the elusive Higgs boson, which is responsible for the masses of all other particles ... The huge general-purpose ATLAS and CMS detectors have been designed to make the hunt for the Higgs boson as easy as possible ... Sometime in 2008, the first dispatches will arrive from beyond the frontier ...... Both CMS and ATLAS are laid out in cylindrical layers of subdetectors but they use markedly different technology. For example, ATLAS is surrounded by eight toroidal magnets, while CMS

uses a single huge solenoid .. and different materials are used in the calorimeters ...

the huge ATLAS detector ...

... weighing some 7000 tonnes and measuring over 26 m in length and 20 m in diameter ... consists of a number of subdetectors, designed to identify and measure different types of particle ... Three main subsystems, are arranged in cylindrical layers around the vacuum pipe that carries the proton beams:

- the inner detector is devoted to measuring charged-particle tracks;

- the calorimeters measure the energy of particles;

- and the outermost subsystem is used to detect muons ...

At the LHC, bunches of some 10^11 protons at a time will be accelerated to 7 TeV. As the protons approach the detectors, they will be focused by electromagnetic fields to increase the chance of a collision. About 20 protons will collide each time the beams cross, most being glancing blows. But occasionally, two quarks in the incoming protons will meet head on, creating a "hard" collision in which the energy released can create new massive particles, including the Higgs boson and sparticles, if they exist. ...

Storing all the data read out from each of the billion or so collisions every second would be impossible, so the detectors themselves will be programmed to select only the most interesting events. Dedicated electronic "trigger" systems will inspect the data for high-energy deposits or interesting patterns of particles, accepting only about 0.25% of the events. Farms of powerful computers will be used to further process the surviving events, reducing the number passed onto waiting physicists for analysis to a more manageable 100 events per second.

Even after this massive filtering exercise, over a petabyte (10^15 bytes ...) of data will be recorded each year. Small teams of physicists will work together on their chosen topic, trying to pick out interesting "signal" events such as Higgs decays while removing "background" events that come from known Standard Model processes. In order to do this, we need to understand what the detectors should see from the Standard Model alone, and what the distinctive "signatures" of new particles will be. This is done using "Monte Carlo simulations": computer programs that generate millions of random events obeying the underlying theoretical equations, starting from the hard collision and simulating the paths and decays of all the particles created by it.

These simulations also include a virtual model of the detector, which mimics the response of each detector element as the particles pass through it. The simulated data from this virtual detector are then fed into the same reconstruction program that physicists will use to inspect the real data, allowing them to compare the real data directly with the predictions of different theoretical scenarios. Such Monte Carlo studies have been carried out since 1984, when the case was first made to fund the LHC, and today the ATLAS database contains simulations of over 600 different scenarios. ...

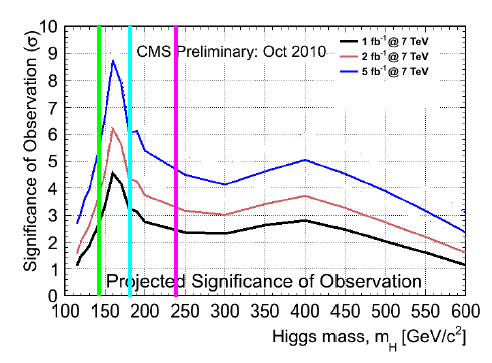

Using the design luminosity of the LHC and a Higgs mass of 250 GeV, we would expect to produce about 10 events containing Higgs bosons every minute. This is a promising start, especially as the detector is designed to run for up to 10 years. ...

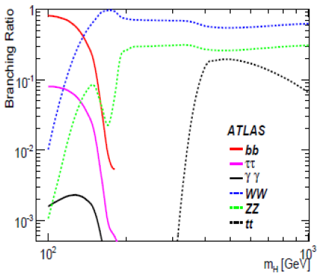

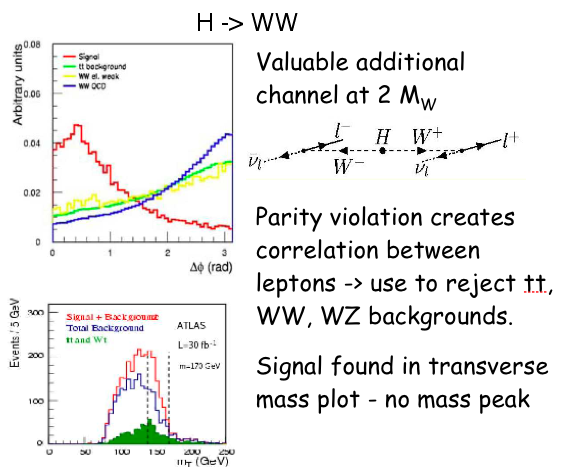

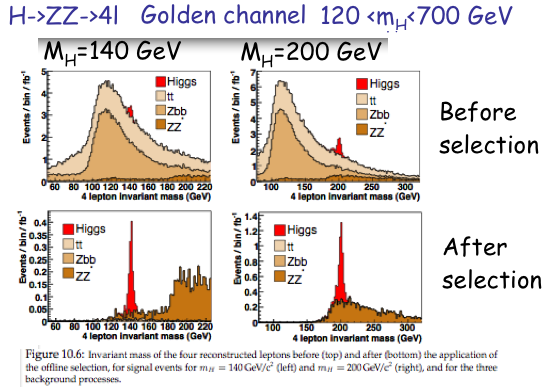

The Higgs boson can decay in many different ways, with the probability of a particular type of decay depending on the as-yet unknown mass of the Higgs. From previous experiments, we know that the Higgs mass must be above 114 GeV/c^2 - otherwise we would already have seen it - while theory requires it to lie below 1 TeV/c^2.

If it has a "low" mass (about 120 GeV/c^2), it will most often decay into a bottom quark and antiquark. Unfortunately, it is very hard to tell these apart from the quarks produced in collisions in which no Higgs is present. A low-mass Higgs can also decay into a pair of photons, but it does this much less often - only once in 1000 decays.

However, this decay is much easier to pick out, and there are so many proton-proton collisions that there will still be thousands of these events produced.

If the Higgs has a high... mass (above 180 GeV/c^2), then it is most likely to decay into pairs of W and Z bosons. The easiest signal to identify occurs when the Higgs decays into two Z bosons, which each decay in turn into a pair of electrons or muons. This signature of four leptons can be easily identified by the detector, and is not often produced by known Standard Model processes. Furthermore, by measuring the momentum of each of the leptons, it is possible to reconstruct the mass of the parent particle of each pair. Hence, particle physicists will home in on this type of decay by looking for two pairs of leptons in an event, the momenta of each of which "add up" to a value close to the known mass of the Z boson. Taking this idea further, the momenta of the two Z bosons can then be used to derive the Higgs mass. ...".
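The four-lepton reconstruction described at the end of the quoted article can be sketched in a few lines. This is only a toy illustration: the leptons are treated as massless, the Z mass value of 91.19 GeV, the 10 GeV acceptance window, and all function names are assumptions introduced here, and real ATLAS or CMS reconstruction software is far more involved.

```python
import math

Z_MASS_GEV = 91.19  # assumed reference value for the Z boson mass


def lepton_four_vector(px, py, pz):
    """Four-momentum (E, px, py, pz) in GeV of a lepton treated as massless."""
    return (math.sqrt(px * px + py * py + pz * pz), px, py, pz)


def add_vectors(*vectors):
    """Component-wise sum of any number of four-vectors."""
    return tuple(sum(components) for components in zip(*vectors))


def invariant_mass(vector):
    """m = sqrt(E^2 - |p|^2), clipped at zero to absorb rounding errors."""
    e, px, py, pz = vector
    m_squared = e * e - (px * px + py * py + pz * pz)
    return math.sqrt(max(m_squared, 0.0))


def is_z_candidate(lepton_a, lepton_b, window_gev=10.0):
    """True if the lepton pair mass lies within the window around the Z mass."""
    pair_mass = invariant_mass(add_vectors(lepton_a, lepton_b))
    return abs(pair_mass - Z_MASS_GEV) < window_gev


if __name__ == "__main__":
    # Toy event: two back-to-back lepton pairs, one along x and one along y.
    pair_1 = (lepton_four_vector(45.6, 0.0, 0.0), lepton_four_vector(-45.6, 0.0, 0.0))
    pair_2 = (lepton_four_vector(0.0, 45.6, 0.0), lepton_four_vector(0.0, -45.6, 0.0))
    if is_z_candidate(*pair_1) and is_z_candidate(*pair_2):
        parent = invariant_mass(add_vectors(*pair_1, *pair_2))
        print(f"two Z candidates, four-lepton mass = {parent:.1f} GeV")
```

Summing the four-vectors of each pair gives the Z candidates, and summing all four leptons gives the mass of the candidate parent particle.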

Note also that for a Higgs to decay at a high rate into a Tquark and a Tbar antiquark, the Higgs mass would have to be at least twice the Tquark mass, or 2x130 = 260 GeV for the lightest Tquark state in my model, or 2x175 = 350 GeV for the 175 GeV Triviality Tquark 8-dim Kaluza-Klein state that is recognized by Fermilab analysts, or 2x218 = 436 GeV for the 218 GeV Critical Point BHL state, and none of the three Higgs states in my model ( 146 GeV, 188 GeV, and 239 GeV ) is heavy enough for such decay into a T-Tbar quark-antiquark pair.
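The threshold arithmetic in this note can be checked with a short sketch. The masses are the ones given in the text; the dictionary labels and function names are illustrative, and the simple rule "Higgs mass at least twice the Tquark mass" is the only physics assumed.

```python
# Tquark and Higgs state masses in GeV, as listed in the text.
T_QUARK_STATES_GEV = {
    "lightest state": 130.0,
    "Triviality 8-dim Kaluza-Klein state": 175.0,
    "Critical Point BHL state": 218.0,
}
HIGGS_STATES_GEV = (146.0, 188.0, 239.0)


def pair_threshold(quark_mass_gev):
    """Minimum Higgs mass for decay into a T-Tbar pair: twice the Tquark mass."""
    return 2.0 * quark_mass_gev


def allowed_decays(higgs_masses, quark_states):
    """List (Higgs mass, Tquark label) combinations that are above threshold."""
    return [
        (m_higgs, label)
        for m_higgs in higgs_masses
        for label, m_quark in quark_states.items()
        if m_higgs >= pair_threshold(m_quark)
    ]


if __name__ == "__main__":
    for label, m_quark in T_QUARK_STATES_GEV.items():
        print(f"{label}: threshold {pair_threshold(m_quark):.0f} GeV")
    print("combinations above threshold:", allowed_decays(HIGGS_STATES_GEV, T_QUARK_STATES_GEV))
```

Even the heaviest Higgs state at 239 GeV falls short of the lowest threshold of 260 GeV, so the list of allowed combinations comes out empty.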

What should the LHC do after that?

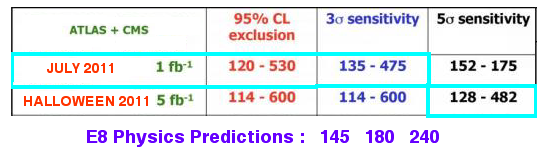

If the LHC validates my E8 Physics model or otherwise observes only Standard Model Higgs below 300 GeV with no indication of any supersymmetry or other new physics, then the energy frontier to be explored will be the energy range above electroweak symmetry breaking (order of 1 TeV). In that region the Higgs mechanism will not be around to generate mass, so everything will be massless, which is an interesting new regime that needs to be explored because:

1 – The fermions of different generations may look a lot alike. For leptons, the massless electron, muon, and tauon may be hard to distinguish. For quarks, all being massless, the Kobayashi-Maskawa matrix may look very different, with possible consequences for CP violation.

2 – Massive neutrinos may lose their mass, and so neutrino oscillation phenomena may change in interesting ways.

3 – With no massive stuff, Conformal Symmetry may become important, leading to phenomena such as:

The LHC upgraded to 14 TeV could explore the range from 1 TeV up to 14 TeV, which is substantially beyond its present 7 TeV. If the LHC by Halloween 2011 validates my E8 Physics model or otherwise observes only Standard Model Higgs below 300 GeV with no indication of any supersymmetry or other new physics, then the best use of the LHC may be to search the massless regime as high as possible. That would require upgrading the LHC to 14 TeV, which could most expeditiously be done by using 2012 for the upgrade and simultaneously for formulating experiments to test the massless regime.

The massless regime is a new unknown frontier, so that any money spent on a 2012 upgrade would not only make the LHC more stable but would get the LHC up to 14 TeV much earlier, enabling exploration that might produce a high return of significant now-unknown useful physics.