Updates for March 2006

According to a Gerald Massey web page:

"... In later life Massey became increasingly

interested in Egyptology. He studied the extensive Egyptian

records housed in the British Museum, eventually teaching himself

to decipher the hieroglyphics. Following years of

diligent research into the history of Egyptian civilisation and

the origins of religion, Massey concluded that Christianity

was neither original nor unique, but that the roots of much of

the Judeo/Christian tradition lay in the prevailing Kamite

(ancient Egyptian) culture of the region. ...".

Gerald Massey (1828-1907) wrote:

- A Book of Beginnings (2 vol) 1881 (reprinted by Black Classic

Press)

- The Natural Genesis (2 vol) 1883 (reprinted by Black Classic

Press)

- Ancient Egypt, The Light of the World (2 vol) (1907)

(reprinted by Black Classic Press)

In an introduction to A Book of Beginnings and to The Natural

Genesis, Charles Finch said:

"... Gerald Massey sought to unravel the psychocultural

strands emanating from the remotest human heritage. ... His

investigations convinced him that the roots of modern culture went

back ... to the ancient beginnings in Africa. ... Massey took the

position that people and culture migrated out of Africa into the

rest of the world ... and that there exists a global cultural

unity that is African at its root. Molecular biology is

bearing Massey out. ...

In Volume II of A Book of Beginnings ... he minutely dissects

the religion and culture of the ancient Hebrews to reveal them as

... an off-shoot of old Kemit and, even more remotely ... of

Africa itself. ...

Josephus ... in his essay Against Apion paraphrases the

Egyptian annalists' explanation of the Exodus, indicating that the

people who so departed Egypt were themselves Egyptian ...

In The Natural Genesis ... Massey ... delved ... into the

source of symbols. ... Massey was keen to trace the manner in

which symbols arose from images taken from the surrounding

topography of nature in the inner African cradleland, or

"placentaland", ( Ta-Kenset ) ... time-space itself was configured

as the uroboric serpent that forms the circle of eternity by

taking its tail in its mouth. ...

... it was Massey's contention that revelation and prophecy

consisted solely in knowing the major celestial time cycles ...

the Precession of the Equinoxes ... ramifying into a Great Year of

26,000 years ... Massey realized ... that the ancients had written

a Book ages anterior to the beginning of conventional history, and

that Book was inscribed in the heavens. ...

Jesus, or ... Yehusua's surname "Pandera" meant "panther" and

... the panther-skin was the emblem of the Afro-Kamite priesthood

...".

In an Appendix to Ancient Egypt, The Light of the World, Massey

compared some Egyptian and Christian terms. Here is only a small

sample of an extensive detailed list of correspondences:

"... Egyptian = Christian ...

Ra, the holy spirit = God the Holy Ghost ...

... the hawk or the dove as the bird of the holy spirit = the dove as the bird of the Holy Spirit ...

The trinity of Atum (or Osiris) the father, Horus (or Iu) the son, and Ra the holy spirit = The Trinity of the Father, Son, and Holy Spirit ...

Isis, the virgin mother of Iu, her Su or son = Mary the virgin mother of Jesus ...

The outcast great mother with her seven sons = Mary Magdalene with her seven devils ...

Seb, Isis, and Horus, the Kamite holy trinity = Joseph, Mary, and Jesus, a Christian holy trinity ...

Sut and Horus, the twin opponents = Satan and Jesus, the twin opponents ...

Anup, the Baptizer = John the Baptist ...

The star, as announcer for the Child-Horus = The Star in the East that indicated the birthplace of Jesus ...

Hermes, the scribe = Hermas, the scribe ...

The paradise of the pole-star = The Holy City lighted by one luminary that is neither the sun nor the moon = the pole-star ...

The ark of Osiris-Ra = The Ark of the New Covenant in heaven ...".

In his books, Gerald Massey had similar detailed lists of

correspondences between Egyptian and

- English

- Hebrew

- Akkado-Assyrian

- Maori

- Various African Dialects

- Namaqua Hottentot

- Makua

- Sanskrit

A recent book along the lines of Gerald Massey's work is Black

Spark, White Fire: Did African Explorers Civilize Ancient

Europe?, (Prima Publishing 1997) by Richard Poe, who quotes

Diodorus as saying

"... Now the Ethiopians, as historians relate,

were the first of all men. They say also that the Egyptians are

colonists sent out by the Ethiopians ...".

Martin Bernal wrote Black Athena: The Afroasiatic Roots

of Classical Civilization (2 volumes, Rutgers 1987, 1991). His

father was J. D. Bernal who in 1929 wrote in "The

World, the Flesh, and the Devil"

"... The stage should soon be reached when materials can

be produced which are not merely modifications of what nature has

given us in the way of stones, metals, woods and fibers, but are

made to specifications of a molecular architecture. ...

Normal man is an evolutionary dead end; mechanical man,

apparently a break in organic evolution, is actually more in the

true tradition of a further evolution. ... man himself must

actively interfere in his own making ... The decisive step will

come when we extend the foreign body into the actual structure of

living matter ...

Connections between two or more minds would tend to become a

more and more permanent condition until they functioned as a dual

or multiple organism. ... The complex minds could ... extend

their perceptions and understanding and their actions far beyond

those of the individual. Time senses could be altered: the events

that moved with the slowness of geological ages would be

apprehended as movement, and at the same time the most rapid

vibrations of the physical world could be separated. ... The

interior of the earth and the stars, the inmost cells of living

things themselves, would be open to consciousness ... and ... the

motions of stars and living things could be directed. ...

consciousness itself may ... become completely

etherealized ... becoming masses of atoms in space

communicating by radiation, and ultimately perhaps resolving

itself entirely into light. That may be an end or a

beginning ...

... leaving on one side the not impossible state in which

mankind would be stabilized and live an oscillating existence

for millennia, we have to consider ... the alternatives:

whether mankind will progress as a whole or will divide

definitely into a progressive and an unprogressive part.

...

More and more, the world may be run by the scientific expert.

The new nations, America, China and Russia, have

begun to adapt to this idea consciously. ... this scientific

development could take place by the colonization of the universe

and the mechanization of the human body. ... there would be an

effective bar between the altered and the non-altered humanity ...

If ... the colonization of space will have taken place ... Mankind

- the old mankind - would be left in undisputed possession of the

earth, to be regarded by the inhabitants of the celestial spheres

with a curious reverence. ...

We are on the point of being able to see the effects of our

actions and their probable consequences in the future; we hold the

future still timidly, but perceive it for the first time, as a

function of our own action. ...".

and as Jack

Sarfatti says, J. D. Bernal "... was the Founder of Birkbeck

College situated on Malet Street behind the venerable British Museum

..." where Gerald Massey studied.

In response to criticism of Black Athena, Martin Bernal wrote

Black Athena Writes Back (Duke 2001), in which he said:

"... I [Martin Bernal] have never claimed that my

ideas are original, merely that I am reviving some neglected older

views and bringing together some scattered contemporary ones ...

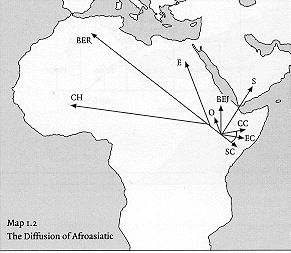

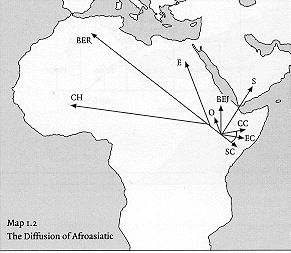

[ CH = Chadic, BER = Berber, E = Egyptian,

O = Omotic, BEJ = Beja, S = Semitic, CC = Central Cushitic, EC =

East Cushitic, SC = South Cushitic ]

... By far the most important single reaction to Black Athena

has been the publication of ... Black Athena Revisited

...[whose]... senior editor [was] Mary Lefkowitz

...[and who wrote a]... popular book Not Out of Africa

[ How Afrocentrism Became an Excuse to Teach Myth As History

]...

In 1991, when Lefkowitz first encountered Afrocentrism through

reading Black Athena, she was appalled. She discovered that there

were people writing books and teaching that Greek civilization had

derived from ... Egypt. ... Lefkowitz's dislike is focused on

those who have argued that African Americans share a common

heritage with Ancient Egypt and further, that through Egypt,

Africa played a significant role in the formation of Ancient

Greece and hence "Western Civilization". ... It is this view of

Greek hybridity and dependence on older, non-European

civilizations that Lefkowitz finds disturbing. ...".

I find it unsettling that, according to a

Publishers Weekly review of Not Out of Africa (on

an Amazon web page), Lefkowitz says

that her attacks on Black Athena are "... defending academic

standards ...", a circumstance that reminds me of opposition to new

ideas, censorship, the deaths of Socrates and Giordano Bruno, and

Gerald Massey's last

poem:

For Truth

He set his battle in array, and thought

To carry all before him, since he fought

For Truth, whose likeness was to him revealed;

Whose claim he blazoned on his battle-shield;

But found in front, impassively opposed,

The World against him, with its ranks all closed:

He fought, he fell, he failed to win the day

But led to Victory another way.

For Truth, it seemed, in very person came

And took his hand, and they two in one flame

Of dawn, directly through the darkness passed;

Her breath far mightier than the battle-blast.

And here and there men caught a glimpse of grace,

A moment's flash of her immortal face,

And turned to follow, till the battle-ground

Transformed with foemen slowly facing round

To fight for Truth, so lately held accursed,

As if they had been Her champion from the first.

Only a change of front, and he who had led

Was left behind with Her forgotten dead.

In 1995, Di Nella and Paturel at Lyon observed in astro-ph/9501015

that:

"The distribution of galaxies up to a distance of 200 Mpc

(650 million light-years) is flat and shows a structure like a

shell roughly centered on the Local Supercluster (Virgo cluster).

This result clearly confirms the existence of the hypergalactic

large scale structure noted in 1988."
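
The distance conversion quoted above (200 Mpc given as 650 million light-years) can be checked with a short sketch. The conversion factor of about 3.2616 light-years per parsec is a standard value supplied here, not something stated in the quoted abstract, and the function name is only illustrative.

```python
# Standard conversion factor (an assumption supplied here, not taken from the quote):
# 1 parsec is about 3.2616 light-years, so 1 Mpc is about 3.2616 million light-years.
LIGHT_YEARS_PER_PARSEC = 3.2616


def mpc_to_million_light_years(distance_mpc: float) -> float:
    """Convert a distance in megaparsecs to millions of light-years."""
    return distance_mpc * LIGHT_YEARS_PER_PARSEC


shell_distance_mly = mpc_to_million_light_years(200.0)
print(f"200 Mpc is about {shell_distance_mly:.0f} million light-years")
```

The result is close to 652 million light-years, so the quoted figure of 650 million is a rounded value.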

Back in 2003, in astro-ph/0302496,

Tegmark, de Oliveira-Costa, and Hamilton said:

"... there is a preferred axis in space along which the

quadrupole has almost no power. This axis is roughly the line

connecting us with (l, b) = (-80, 60) in Virgo. ... Moreover,

this [octopole] axis is seen to be approximately

aligned with that for the quadrupole. ... In contrast, the

hexadecapole is seen to exhibit the more generic behavior we

expect of an isotropic random field, with no obvious preferred

axis. ...".

Update to 2006:

Cosmology News said:

"... 4 March 2003 A new study shows that the

(weak) large-scale temperature fluctuations in the microwave

background are perpendicular to a spatial axis pointing towards

Virgo. This tentatively suggests that if the universe is indeed

multiconnected, it connects up with itself in that direction. In

other words, if you want to travel through space in a straight

line and return to your starting point, Virgo is the most

promising direction to head.

8 October 2003 An expository article and a research

article in the 9 October 2003 issue of Nature show that the

Poincaré dodecahedral space may account for the weak

large-scale temperature fluctuations in the microwave background.

The Poincaré dodecahedral space is like a 3-torus, but made

from a regular dodecahedron instead of a cube. Another crucial

difference is that the dodecahedral model implies that space is

slightly curved, unlike the 3-torus which is flat like Euclidean

space. Confirmation or refutation of the proposed model is

expected soon, within weeks or months. Links will be provided here

as more information becomes available.

April 2004 The second-year WMAP data, expected by

February of this year, has been delayed due to some "surprises" in

the data. The nature of the surprises is being kept secret while

the WMAP team studies them in hopes of understanding their

significance. The surprises may well be related to some anomalies

discovered in the first-year data, such as statistically

significant differences in the CMB between the northern and

southern galactic hemispheres, and unsettling coincidences between

the directions of the largest scale fluctuations and the plane of

the solar system (the ecliptic). The latter suggest that the

largest scale fluctuations on the microwave sky might not be

coming from deep space after all, but rather from sources in or

around the solar system, or could perhaps even be due to some

still undiscovered error in the data analysis. Of course such

speculation should be taken with a grain of salt until the WMAP

team releases the second-year data along with their analysis of

it.

February 2006 Careful analysis has shown that the

strange alignment of the broadest Cosmic Microwave Background

(CMB) fluctuations is real and significant at the 99% level.

The alignment, together with the extreme weakness of those broad

fluctuations, presents a real mystery whose resolution is not yet

in sight. On the other hand, alignments with the ecliptic

turned out to be spurious. The long-awaited second-, third-

and fourth-year WMAP data, including the polarization data, still

have not appeared. The WMAP team is analyzing these data with

utmost care, leading one to speculate that exciting conclusions

may follow. ...".

March 2006 - Sean Carroll, in a

16 March 2006 CosmicVariance blog entry, said:

"... the new

WMAP results ... I can quickly summarize the major points as I

see them. ...

- ... the power spectrum: amount of anisotropy as a function

of ... multipole moment l ... The major difference between this

and the first-year release is that several points that used to

not really fit the theoretical curve are now, with more data

and better analysis, in excellent agreement with the

predictions of the conventional LambdaCDM model. That's a

universe that is spatially flat and made of baryons, cold dark

matter, and dark energy.

- In particular, the octopole moment (l=3) is now in much

better agreement than it used to be. The quadrupole moment

(l=2), which is the largest scale on which you can make an

observation (since a dipole anisotropy is inextricably mixed up

with the Doppler effect from our motion through space), is

still anomalously low.

- The best-fit universe has approximately 4% baryons, 22%

dark matter, and 74% dark energy, once you combine WMAP with

data from other sources. The matter density is a tiny bit low,

although including other data from weak lensing surveys brings

it up closer to 30% total. ...

- Perhaps the most intriguing result is that the scalar

spectral index n is 0.95 ± 0.02. This tells you the amplitude

of fluctuations as a function of scale; if n=1, the amplitude

is the same on all scales. Slightly less than one means that

there is slightly less power on smaller scales. The reason why

this is intriguing is that, according to inflation, it's quite

likely that n is not exactly 1. Although we don't have any

strong competitors to inflation as a theory of initial

conditions, the successful predictions of inflation have to

date been somewhat "vanilla" - a flat universe, a flat

perturbation spectrum. This expected deviation from perfect

scale-free behavior is exactly what you would expect if

inflation were true. The statistical significance isn't what it

could be quite yet, but it's an encouraging sign.

- ... lower power on small scales (as implied by n<1)

helps explain some of the problems with galaxies on small

scales. If the primordial power is less, you expect fewer

satellites and lower concentrations, which is what we actually

observe. ...

- The dark energy equation-of-state parameter w is a tiny bit

greater than -1 with WMAP alone, but almost exactly -1 when

other data are included. ...

- One interesting result from the 1st-year data is that

reionization - in which hydrogen becomes ionized when the first

stars in the universe light up - was early, and the

corresponding optical depth was large. It looks like this

effect has lessened in the new data ...

- A lot of work went into understanding the polarization

signals, which are dominated by stuff in our galaxy. WMAP

detects polarization from the CMB itself, but so far it's the

kind you would expect to see being induced by the perturbations

in density. There is another kind of polarization ("B-mode"

rather than "E-mode") which would be induced by gravitational

waves produced by inflation. This signal is not yet seen, but

it's not really a surprise; the B-mode polarization is expected

to be very small, and a lot of effort is going into designing

clever new experiments that may someday detect it. In the

meantime, WMAP puts some limits on how big the B-modes can

possibly be, which do provide some constraints on inflationary

models. ...".
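
To make the remark about the spectral index concrete, here is a minimal sketch of how much a tilt of n = 0.95 suppresses power on smaller scales. It assumes the usual pure power-law parameterization, in which the dimensionless primordial power scales as (k / k_pivot)^(n - 1). The pivot k = 0.002 Mpc^(-1) is the one named in the WMAP parameter paper quoted further down this page. The function name and the comparison wavenumber of 0.2 Mpc^(-1) are illustrative choices, not values from the blog entry.

```python
def relative_power(k: float, n_s: float, k_pivot: float = 0.002) -> float:
    """Primordial power at wavenumber k relative to the pivot scale.

    Assumes a pure power law: power proportional to (k / k_pivot) ** (n_s - 1).
    Wavenumbers are in 1/Mpc; larger k means smaller physical scales.
    """
    return (k / k_pivot) ** (n_s - 1.0)


# Compare a scale-invariant spectrum (n = 1) with the tilted WMAP value (n = 0.95)
# at a wavenumber 100 times larger than the pivot (an illustrative choice).
scale_invariant = relative_power(0.2, n_s=1.0)
tilted = relative_power(0.2, n_s=0.95)

print(f"n = 1.00: relative power {scale_invariant:.3f}")
print(f"n = 0.95: relative power {tilted:.3f}")
```

Under these assumptions the tilted spectrum has roughly 20% less power two decades in wavenumber away from the pivot, which is the sense in which n slightly below 1 means slightly less power on smaller scales.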

The WMAP

3-year Polarization paper says:

"... there is a residual signal in our power

spectra that we do not yet understand. It is evident in W band

in EE at l = 7 and to a lesser degree at l = 5 and l = 9.

We see no clear evidence of it anywhere else. ... The W-band

EE l = 7 value is essentially unchanged by cleaning, removing a

10 degree radius around the Galactic caps, or by additionally

masking ±10 degree in the ecliptic plane. ... To avoid

biasing the result by this residual artifact which also

possibly masks some unmodeled dust and synchrotron

contamination, we limit the cosmological analysis to the QV

combination. ...

We detect the optical depth with tau = 0.088 (+0.028, -0.034) ...

The same free electrons from reionization that lead to the l

< 10 EE signal act as test particles that scatter the

quadrupolar temperature anisotropy produced by gravitational

waves (tensor modes) originating at the birth of the universe.

The scatter results in polarization B modes. ... While scalar

and tensor fluctuations both contribute to the TT and EE

spectra, only tensors produce B modes ... The tensor

contribution is quantified with the tensor to scalar ratio r

... Using primarily the TT spectrum, along with the optical

depth established with the TE and EE spectra, the tensor to

scalar ratio is limited to r < 0.55 (95% CL). When the

large scale structure power spectrum is added to the mix ...

the limit tightens to r < 0.28 (95% CL). These values are

approaching the predictions of the simplest inflation

models. ...

The detection of the TE anticorrelation near l = 30

is a fundamental measurement of the physics of the formation of

cosmological perturbations ... It requires some mechanism

like inflation to produce and shows that superhorizon

fluctuations must exist. ...".

The WMAP

3-year Temperature paper says:

"... The new polarization data ... produce a better

measurement of the optical depth to re-ionization, tau =

0.088 (+0.028, -0.034). This new and tighter constraint on

tau helps break a degeneracy with the scalar spectral index

which is now found to be ns = 0.95 ± 0.02. ...

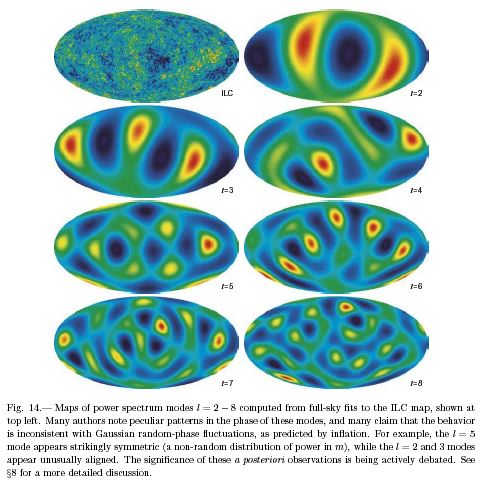

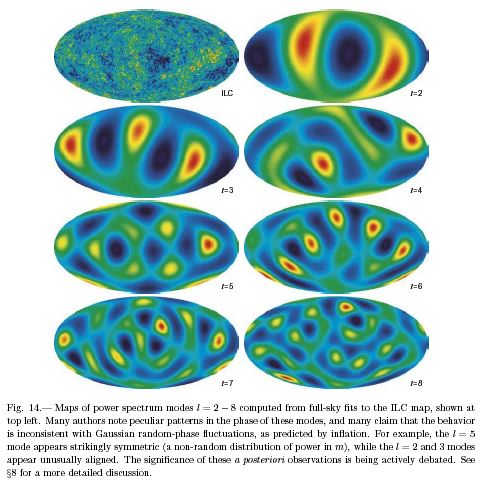

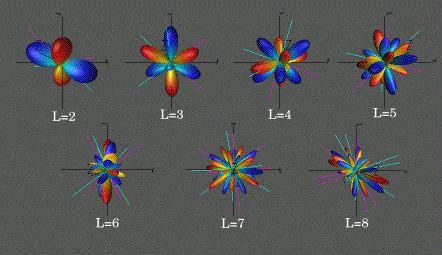

Sky maps of the modes from l = 2 - 8, derived from the ILC

map, are shown in Figure 14. ...

... There has been considerable comment on the non-random

appearance of these modes. ... It has been noted by several

authors that the orientation of the quadrupole and octopole

are closely aligned ... and that the distribution of power

amongst the a_lm coefficients is possibly non-random. ... the

basic structure of the low l modes is largely unchanged from

the first-year data. Thus we expect that most, if not all, of

the "odd" features claimed to exist in the first-year maps will

survive. ... the quadrupole amplitude is indeed low, but not

low enough to rule out /\CDM. ...

there do appear to be some questionable features in the data

... These features include:

- low power, especially in the quadrupole moment;

- alignment of modes, particularly along an "axis of evil"

...

- the quadrupole and octupole phases are notably

aligned with each other and ...

- the octupole is unusually "planar" with most of its

power aligned approximately with the Galactic plane

...

- the quadrupole plane and the three octopole planes

[are] "remarkably aligned."...

- three of these planes are orthogonal to the ecliptic

and the normals to these planes are aligned with the

direction of the cosmological dipole and with the

equinoxes. This had led to speculation that the low-l

signal is not cosmological in origin ...

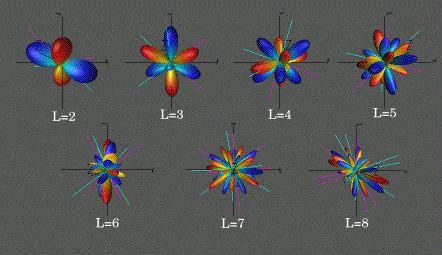

- "multipole vectors" ... characterize the geometry of

the l modes ...[showing]... that the "oriented

area of planes defined by these vectors . . . is

inconsistent with the isotropic Gaussian hypothesis at

the 99.4% level for the ILC map." ...

[ "multipole vectors" are described by Copi, Huterer, and

Starkman on a

cwru web page with an illustration on which the following image

is based:

]

- the l = 5 mode is "spherically symmetric" at 3 sigma,

and the l = 6 mode is planar at 2 sigma confidence

...

- l = 3 and 5 modes are aligned in both direction and

azimuth ...

- unequal fluctuation power in the northern and southern

sky ... the ratio of low-l power between two hemispheres

...[shows]... that only 0.3% of simulated skies have

as low a ratio as observed ...

- a surprisingly low three-point correlation function in

the northern sky;

- an unusually deep/large cold spot in the southern sky;

and

- various "ringing" features, "glitches", and/or "bites"

in the power spectrum. ...

the most scientifically compelling development would be

the introduction of a new model that explains a number of

currently disparate phenomena in cosmology (such as the

behavior of the low l modes and the nature of the dark

energy) while also making testable predictions of new

phenomena. ...".
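
For readers keeping track of the low l modes discussed in the quote above, the following sketch tabulates, for l = 2 through 8, the number of independent a_lm coefficients (2l + 1 for each l) and a rough angular scale. The 180/l degrees figure is only the common rule of thumb for the angular size probed by multipole l, not a number taken from the WMAP paper, and the function name is hypothetical.

```python
def multipole_summary(l_min: int = 2, l_max: int = 8):
    """Return (l, number of a_lm coefficients, rough angular scale in degrees)."""
    rows = []
    for l in range(l_min, l_max + 1):
        n_coefficients = 2 * l + 1          # m runs from -l to +l
        angular_scale_deg = 180.0 / l       # rule-of-thumb angular scale
        rows.append((l, n_coefficients, angular_scale_deg))
    return rows


rows = multipole_summary()
for l, n_coefficients, angular_scale_deg in rows:
    print(f"l = {l}: {n_coefficients:2d} coefficients, ~{angular_scale_deg:.1f} degrees")

total_coefficients = sum(n for _, n, _ in rows)
print(f"total a_lm coefficients for l = 2..8: {total_coefficients}")
```

The quadrupole has only 5 coefficients and the octopole only 7, which is one reason statistical statements about these modes are sensitive to how the sky is masked and cleaned.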

The WMAP

3-year parameter paper says:

"... r and ns are defined at k = 0.002 Mpc^(-1).

... The WMAP data requires either tensor modes or a spectral

index with ns < 1 to fit the angular power spectrum. ...

The low l multipoles, particularly l = 2, are lower than

predicted in the /\CDM model. ... Models with

significant gravitational wave contributions, r = 0.3, make

a ... prediction ...[of] ... a modified temperature

spectrum with more power at low multipoles ...

The deviation of the primordial power spectrum from a

simple power law can be most simply characterized by a sharp

cut-off in the primordial spectrum. Analysis of this model

finds that putting in a cut off of k_c = 3 x 10^(-4) /

Mpc improves the fit ...

the simplest inflationary models predict only mild

non-Gaussianities that should be undetectable in the WMAP data

... If the universe were finite and had a size comparable to

horizon size today, then the CMB fluctuations would be

non-Gaussian ... Since the release of the WMAP data,

several groups have claimed detections of significant

non-Gaussianities ... Almost all of these claims imply that

the CMB fluctuations are not stationary and claim a

preferred direction or orientation in the data. ... we

choose to address in a unifying manner the large scale

"asymmetry", "alignment" and low l power issues discussed in

the literature after the first year release ... by testing the

hypothesis that the observed temperature fluctuations, Tbar,

can be described as a Gaussian and isotropic random field

modulated on large scales by an arbitrary function ... mild

deviations ... are observed ... the best fit form for f

...[is]... an axis lying near the ecliptic

plane. This is the same feature that has been identified in

a number of papers on non-Gaussianity. ... If we were eager to

claim evidence of strong non-Gaussianity, we could quote the

probability of this occurring randomly as less than 2%. We,

however, do not interpret the improvement ... as evidence

against the hypothesis that the primordial fluctuations are

Gaussian. Since the existence of non-Gaussian features in

the CMB would require dramatic reinterpretation of our theories

of primordial fluctuations, more compelling evidence is

required. ... an alternative model that better fits the low

l data would be an exciting development. ...".

My opinion from 2003 remains unchanged. It is that dipole (line

segment) configurations

*---*

are naturally related to spatial axes in 3-dimensional space, as

are quadrupole (square) and octopole (cube) configurations

    *---*     *---*
    |   |     |\  |\
    *---*     *-*-*-*
               \|  \|
                *---*

However, since higher multipoles, from hexadecapole on up, are

related to 4-dimensional and higher dimensional hypercubes, they do

not have such a direct relationship to spatial axes in 3-dimensional

space. It seems to me that the dipole, the quadrupole and the

octopole are all aligned with respect to the same axis, which

corresponds to the Great Attractor in Virgo. Therefore, I thought in

2003, and I still think, that the dipole, quadrupole and octopole are

all related to the Great Attractor in Virgo, and that

"an alternative model that better fits the

low l data" and "a new model that explains ... the behavior of the

low l modes and the nature of the dark energy"

could be the Segal conformal gravity sector of

my D4-D5-E6-E7-E8 VoDou Physics Model, because:

- Dark Energy

comes from the special conformal and Lorentz generators of the

conformal group Spin(2,4) =

SU(2,2), and

- the line-of-sight through the Great

Attractor in Virgo goes through an unusually large proportion of

Minkowski-phase

"islands" floating in the Conformal-phase Dark Energy

"sea".

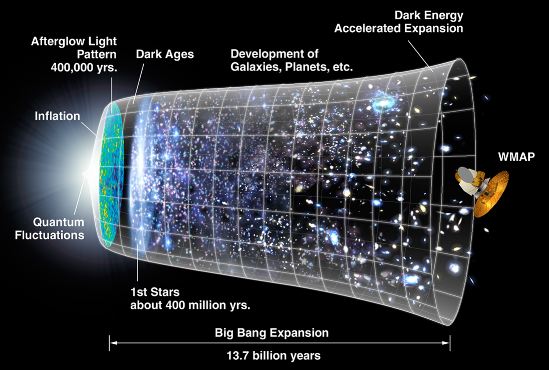

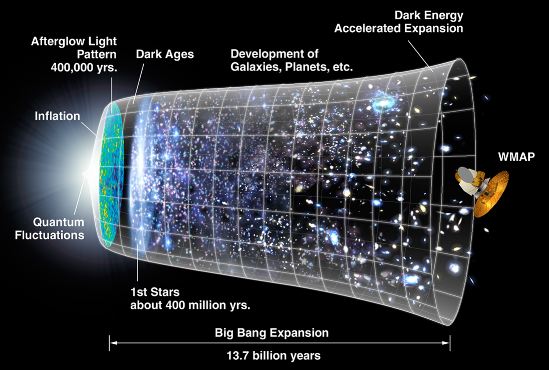
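
The counting behind the dipole, quadrupole, octopole picture given above can be sketched as follows: an l-th order multipole in this picture has 2^l poles, which matches the 2^l vertices of an l-dimensional cube. The code only illustrates that vertex counting. It does not test the physical claim about alignment with Virgo, and the names used are illustrative.

```python
from itertools import product

# Names of the lowest multipoles, keyed by the dimension of the matching cube.
MULTIPOLE_NAMES = {1: "dipole", 2: "quadrupole", 3: "octopole", 4: "hexadecapole"}


def hypercube_vertices(dim: int):
    """All vertices of the dim-dimensional cube with coordinates -1 or +1."""
    return list(product((-1, 1), repeat=dim))


for dim, name in MULTIPOLE_NAMES.items():
    vertices = hypercube_vertices(dim)
    fits_in_3_space = dim <= 3
    print(f"{name}: {len(vertices)} poles, {dim}-cube, "
          f"embeds in 3-dimensional space: {fits_in_3_space}")
```

The line segment, square and cube all fit inside 3-dimensional space, while the 16 vertices of the hexadecapole case need a 4-dimensional cube.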

A WMAP web

page has a nice illustration from the NASA/WMAP Science Team

of our expanding universe:


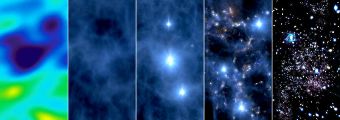

Another WMAP

web page has an illustration from the NASA/WMAP Science

Team

with a caption that says:

- "... Stars A sequence from a movie called The Universe.

- Frame one depicts temperature fluctuations (shown as

color differences) in the oldest light in the universe, as

seen today by WMAP. Temperature fluctuations arose from the

slight clumping of material in the infant Universe, which

ultimately led to the vast structures of galaxies we see

today.

- Frame two shows matter condensing as gravity pulls

matter from regions of lower density onto regions of higher

density.

- Frame three captures the era of the first stars, about

400 million years after the Big Bang. Gas has condensed and

heated up to temperatures high enough to initiate nuclear

fusion, the engine of the stars.

- Frame four shows more stars turning on. Galaxy chains

forms along those filaments first seen in frame two, a web

of structure.

- Frame five depicts the modern era, billions upon

billions of stars and galaxies... all from the seeds planted

in the infant Universe. ...".

The Cosmological Constant is described from an Algebraic Quantum

Field Theory point of view by Hollands and Wald in

gr-qc/0405082, where they say:

"... there are holistic aspects of quantum field

theory that cannot be properly understood ... by applying ordinary

quantum mechanics to the low energy effective degrees of freedom

of a more fundamental theory defined at

ultra-high-energy/short-wavelength scales ...

the absurdly large value obtained for the stress-energy of a

quantum field when computed by applying quantum mechanics without

subtractions to the low energy modes of the field is usually

referred to as the "cosmological constant problem". In 4

dimensions, a calculation ... would yield an expected energy

density of order ... ( 10^19 GeV)^4 ... By contrast, the

actual energy density of our universe is only of order ... (

10^(-12) GeV)^4 ... the enormous discrepancy between the naive

mode-sum calculation and the observed energy density is therefore

generally viewed as a very serious "problem".

We do not share this view. As we have argued above, there are

many aspects of the theory of a quantum field that simply cannot

be understood by viewing its low energy degrees of freedom as

being independent. The mode sum calculations ... do not properly

take into account the holistic aspects of quantum field theory.

... If one accepts the holistic aspects of quantum field theory,

there is still a "cosmological constant problem", but it is rather

different than the usual formulation of it. The puzzle is not,

"Why is the observed energy density of the universe so small?" ...

Rather, the puzzle is, "Why is the cosmological constant so

large?"

Quantum field theory predicts that the stress-energy tensor

of a free quantum field in an adiabatic vacuum state in a slowly

expanding 4-dimensional universe should be of order of L^(-4),

where L denotes the size and/or radius of curvature of the

universe. For our universe, 1/L would be of order 10^(-42)

GeV. But observations of type Ia supernovae and the cosmic

microwave background strongly suggest that, at the present time,

the dominant component of stress-energy in the universe is

smoothly distributed (i.e., not clustered with galaxies) and has

negative pressure. The energy density of this so-called "dark

energy" is thus (10^(-12) GeV)^4, i.e. roughly the geometric

mean of the unsubtracted mode sum and quantum field theoretic

predictions for vacuum energy density.

... if dark energy does correspond to vacuum energy of an

interacting quantum field, it is our view that its properties will

be understood only by fully taking into account the holistic

nature of quantum field theory.

... Of course, it remains a very significant puzzle as to

why quantum field theory possesses holistic aspects, i.e., how

they arise from the more fundamental, underlying theory.

However, it is likely that we will need a much deeper

understanding of the underlying theory in order to account for

this. ...".
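
The geometric-mean remark in the passage above can be checked with simple arithmetic on the exponents. This is a minimal sketch using only the three energy scales quoted by Hollands and Wald; working in log10 of GeV and the variable names are choices made here for illustration.

```python
# Energy scales quoted in the Hollands and Wald passage, as log10 of GeV.
LOG10_MODE_SUM_SCALE = 19.0    # naive unsubtracted mode sum: (10^19 GeV)^4
LOG10_CURVATURE_SCALE = -42.0  # adiabatic vacuum estimate: 1/L of order 10^(-42) GeV
LOG10_OBSERVED_SCALE = -12.0   # observed dark energy: (10^(-12) GeV)^4


def log10_geometric_mean_density(log10_scale_a: float, log10_scale_b: float) -> float:
    """log10 of the geometric mean of two energy densities of the form scale**4."""
    log10_density_a = 4.0 * log10_scale_a
    log10_density_b = 4.0 * log10_scale_b
    return 0.5 * (log10_density_a + log10_density_b)


log10_mean_density = log10_geometric_mean_density(
    LOG10_MODE_SUM_SCALE, LOG10_CURVATURE_SCALE
)
log10_mean_scale = log10_mean_density / 4.0  # convert back to an energy scale

print(f"geometric mean density: (10^({log10_mean_scale}) GeV)^4")
print(f"observed density:       (10^({LOG10_OBSERVED_SCALE}) GeV)^4")
```

The geometric mean comes out at (10^(-11.5) GeV)^4, within half a decade in energy scale of the observed (10^(-12) GeV)^4, which is the sense of "roughly" in the quoted text.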

Such a deeper understanding is supplied by my D4-D5-E6-E7-E8

VoDou Physics Model. It is based on a generalized hyperfinite II_1

von Neumann algebra factor whose basic building block is the real

Clifford algebra Cl(8). Triality symmetries of Cl(8) give ultraviolet

cancellations leading to a zero value of the vacuum fluctuation

Cosmological Constant in the full high-energy regime of 8-dimensional

spacetime.

These symmetries are inherited by the effective low-energy regime

with 4-dimensional spacetime, so that it also has a zero value of the

vacuum fluctuation Cosmological Constant /\. The non-zero /\ that we

observe now in our universe (and that we measured with WMAP) is due

to the Conformal Gravity of 4-dimensional spacetime based on the

ideas of Irving Segal, which gives calculated values of the ratio

Dark Energy : Dark Matter : Ordinary Matter that are consistent with

WMAP observations.
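
As background for the conformal group Spin(2,4) = SU(2,2) named earlier, here is a small sketch that counts its generators. It uses only the standard facts that so(p,q) has n(n-1)/2 generators with n = p + q, and that the 15 generators of the conformal group of 4-dimensional spacetime split into translations, Lorentz transformations, a dilation and special conformal transformations. It does not derive the Dark Energy : Dark Matter : Ordinary Matter ratio, and the names in the code are illustrative.

```python
def so_dimension(p: int, q: int) -> int:
    """Number of generators of the Lie algebra so(p, q)."""
    n = p + q
    return n * (n - 1) // 2


# Standard decomposition of the conformal algebra of 4-dimensional spacetime.
CONFORMAL_GENERATORS = {
    "translations": 4,
    "Lorentz": 6,
    "dilation": 1,
    "special conformal": 4,
}

total_generators = sum(CONFORMAL_GENERATORS.values())
special_conformal_plus_lorentz = (
    CONFORMAL_GENERATORS["special conformal"] + CONFORMAL_GENERATORS["Lorentz"]
)

print(f"dim so(2,4) = {so_dimension(2, 4)}, sum of parts = {total_generators}")
print(f"special conformal + Lorentz generators = {special_conformal_plus_lorentz}")
```

The special conformal and Lorentz generators together account for 10 of the 15 generators.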

The Hollands and Wald paper at

gr-qc/0405082 is discussed in physics/0603112

by Bert Schroer, who says therein:

"... there are two important concepts of localizations in

relativistic quantum theory: the Newton-Wigner localization and

modular localization. ...

the N-W localization results from the adaptation of the Born

x-space localization probability to relativistic wave functions

and connects to a position operator and associated localization

projectors and probabilities ...

The use of N-W localization becomes ... deadly wrong

(superluminal acausalities) if used for propagation over finite

distances.

modular localization results from the attempt to

liberate the causal localization inherent in pointlike quantum

fields from the non-intrinsic aspects of field-coordinatization.

Modular localization theory assigns a preferred role to

operator algebras associated with spatial wedge regions; in

some sense which can be made precise wedge algebras implement the

best compromise between particles and fields. As in the Lagrangian

quantization approach the perturbative construction of a model is

in principle determined once one specified the Lagrangian, the QFT

in the modular localization setting is uniquely determined in

terms of the structure of its wedge algebras (the position of the

wedge algebra within the algebra of all operators, or the

algebraic structure of generators). The algebras for smaller

regions (spacelike cones, double cones) are determined in terms of

algebraic intersections of wedge algebras. ...

the local net of spacetime-indexed operator algebras which ...

quantum fields ... generate ... is analogous to the

coordinate-independent setting achieved in modern differential

geometry. ...

Modular localization ... does not lead to projectors and

probabilities but is correct concept for the covariant causal

localization of states and operator algebras. ... There are

massive Wigner representations in d=1+2 dimensions with anomalous

(non-halfinteger) spin whose associated fields have plektonic

(braid group) statistics which is inconsistent with a pointlike

localization as well with an onshell structure. More precisely

even in the "freest version" (vanishing scattering cross section)

the realization of braid group statistics requires that any

operator whose one-time application to the vacuum is a state with

a one-particle component has necessarily a nonvanishing vacuum

polarization cloud, in other words there is no on-shell free

anyon field.. Many properties of anyons (anyon=abelian

plekton) can be seen by applying modular localization to the

Wigner representation. ...

if vector potentials become quantum objects ... The

standard way... is to temporarily forget the positivity

requirement and to uphold the pointlike structure so that the

usual perturbative Lagrangian quantization approach could be

applied. This is achieved by artificially extending the quantum

theory by adding in unphysical ghosts which at the end of

the calculation have to be removed.

From the outset it is not clear that after having done the

perturbation theory in this unphysical setting one can remove the

ghosts from quantities which in classical sense would be gauge

invariant and the best formulation which makes such a descend

manifest (by formulating the physical descend as a cohomological

problem) is the well known BRST formalism of gauge theory. ... The

problem starts if such a BRST "catalyzer" (Ghosts are neither in

the original problem of spin one particle representations nor in

the final physical answers) is not considered as a temporary

computational trick, but becomes elevated to the status of a

fundamental physical tool. ...

Behind all these remarks is a theory, which after the Hilbert

space operator formulation of quantum mechanics is the most

impressive examples of a perfect matching of mathematical

concepts: the modular (Tomita-Takesaki) theory of operator

algebras and its unifying awe-abiding power to relate

statistical mechanics, quantum field theoretical localization and

the local quantum physical reason d'etre for the emergence of

internal and external symmetries from general properties of

operator algebras. ... Connes used this new concepts to

significantly extend the classification of factor algebras started

by Murray and von Neumann. ...

A particularly radical result in comparison with the standard

Lagrangian setting is the possibility to describe a

full-fledged QFT with all its structural richness in terms of a

finite number of "monades" i.e. copies of one unique object in a

common environment such that all physics is encoded in the

relative positions of these copies. If one interprets the word

monade in this physical realization of Leibniz's philosophy as the

unique (up to isomorphism) hyperfinite type III1 Murray von

Neumann factor ...

[ In my D4-D5-E6-E7-E8 VoDou Physics

Model, the "monade" is a generalized hyperfinite II1 von Neumann

algebra factor whose basic building block is the real Clifford

algebra Cl(8).

John Baez, in his week

175, described the classification of von Neumann algebras:

"... While classifying all *-algebras of operators is an utterly

hopeless task, classifying von Neumann algebras is almost within

reach - close enough to be tantalizing, anyway. Every von Neumann

algebra can be built from so-called "simple" ones as a direct sum, or

more generally a "direct integral", which is a kind of continuous

version of a direct sum. As usual in algebra, the "simple" von

Neumann algebras are defined to be those without any nontrivial

ideals. This turns out to be equivalent to saying that only scalar

multiples of the identity commute with everything in the von Neumann

algebra.

People call simple von Neumann algebras "factors" for short.

Anyway, the point is that we just need to classify the factors: the

process of sticking these together to get the other von Neumann

algebras is not tricky.

The first step in classifying factors was done by von Neumann and

Murray, who divided them into types I, II, and III. This

classification involves the concept of a "trace", which is a

generalization of the usual trace of a matrix.

Here's the definition of a trace on a von Neumann algebra. First,

we say an element of a von Neumann algebra is "nonnegative" if it's

of the form xx* for some element x. The nonnegative elements form a

"cone": they are closed under addition and under multiplication by

nonnegative scalars. Let P be the cone of nonnegative elements. Then

a "trace" is a function tr: P -> [0, +infinity] which is

linear in the obvious sense and satisfies tr(xy) = tr(yx) whenever

both xy and yx are nonnegative.

Note: we allow the trace to be infinite, since the interesting von

Neumann algebras are infinite-dimensional. This is why we define the

trace only on nonnegative elements; otherwise we get "infinity minus

infinity" problems. The same thing shows up in the measure theory,

where we start by integrating nonnegative functions, possibly getting

the answer +infinity, and worry later about other functions.

Indeed, a trace is very much like an integral, so we're really

studying a noncommutative version of the theory of integration. On

the other hand, in the matrix case, the trace of a projection

operator is just the dimension of the space it's the projection onto.

We can define a "projection" in any von Neumann algebra to be an

operator with p* = p and p^2 = p. If we study the trace of such a

thing, we're studying a generalization of the concept of dimension.

It turns out this can be infinite, or even nonintegral!

We say a factor is type I if it admits a nonzero trace for

which the trace of a projection lies in the set

{0,1,2,...,+infinity}. We say it's type I_n if we can normalize

the trace so we get the values {0,1,...,n}. Otherwise, we say

it's type I_infinity, and we can normalize the trace to get all the

values {0,1,2,...,+infinity}. It turns out that every type I_n

factor is isomorphic to the algebra of n x n matrices. Also,

every type I_infinity factor is isomorphic to the algebra of all

bounded operators on a Hilbert space of countably infinite dimension.

Type I factors are the algebras of observables that we learn to

love in quantum mechanics. So, the real achievement of von

Neumann was to begin exploring the other factors, which turned out

to be important in quantum field theory.

We say a factor is type II1 if it admits a trace whose values

on projections are all the numbers in the unit interval

[0,1]. We say it is type II_infinity if it admits a

trace whose value on projections is everything in

[0,+infinity]. Playing with type II factors amounts to

letting dimension be a continuous rather than discrete parameter!

Weird as this seems, it's easy to construct a type II1 factor. Start

with the algebra of 1 x 1 matrices, and stuff it into the algebra of

2 x 2 matrices as follows:

        ( x  0 )
x |->   (      )
        ( 0  x )

This doubles the trace, so define a new trace on the algebra of 2

x 2 matrices which is half the usual one. Now keep doing this,

doubling the dimension each time, using the above formula to define

a map from the 2^n x 2^n matrices into the 2^(n+1) x 2^(n+1) matrices,

and normalizing the trace on each of these matrix algebras so that

all the maps are trace-preserving. Then take the union of all

these algebras... and finally, with a little work, complete

this and get a von Neumann algebra! One can show this von Neumann

algebra is a factor. It's pretty obvious that the trace of a

projection can be any fraction in the interval [0,1] whose

denominator is a power of two. But actually, any number from 0 to 1

is the trace of some projection in this algebra - so we've got our

paws on a type II1 factor. This isn't the only II1 factor, but

it's the only one that contains a sequence of finite-dimensional

von Neumann algebras whose union is dense in the weak topology.

A von Neumann algebra like that is called "hyperfinite", so

this guy is called "the hyperfinite II1 factor". It may sound like

something out of bad science fiction, but the hyperfinite II1 factor

shows up all over the place in physics! First of all, the algebra of

2^n x 2^n matrices is a Clifford algebra, so the hyperfinite II1

factor is a kind of infinite-dimensional Clifford algebra. But

the Clifford algebra of 2^n x 2^n matrices is secretly just another

name for the algebra generated by creation and annihilation operators

on the fermionic Fock space over C2n. Pondering this a bit, you can

show that the hyperfinite II1 factor is the smallest von Neumann

algebra containing the creation and annihilation operators on a

fermionic Fock space of countably infinite dimension. In less

technical lingo - I'm afraid I'm starting to assume you know quantum

field theory! - the hyperfinite II1 factor is the right algebra of

observables for a free quantum field theory with only fermions. For

bosons, you want the type I_infinity factor. There is more than one

type II_infinity factor, but again there is only one that is

hyperfinite. You can get this by tensoring the type I_infinity

factor and the hyperfinite II1 factor. Physically, this means

that the hyperfinite II_infinity factor is the right algebra of

observables for a free quantum field theory with both bosons and

fermions.

The most mysterious factors are those of type III. These can be

simply defined as "none of the above"! Equivalently, they are factors

for which any nonzero trace takes values in {0,infinity}. In a type

III factor, all projections other than 0 have infinite trace. In

other words, the trace is a useless concept for these guys. As far as

I'm concerned, the easiest way to construct a type III factor uses

physics. Now, I said that free quantum field theories had different

kinds of type I or type II factors as their algebras of observables.

This is true if you consider the algebra of all observables. However,

if you consider a free quantum field theory on (say) Minkowski

spacetime, and look only at the observables that you can cook from

the field operators on some bounded open set, you get a subalgebra of

observables which turns out to be a type III factor! In fact, this

isn't just true for free field theories. According to a theorem of

axiomatic quantum field theory, pretty much all the usual field

theories on Minkowski spacetime have type III factors as their

algebras of "local observables" - observables that can be measured in

a bounded open set. ...". ]
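
[ The doubling construction that Baez describes can be checked on small cases. What follows is only a minimal Python sketch under stated assumptions: the helper names embed, normalized_trace, dyadic_projection and matmul are hypothetical names introduced here for illustration, and matrices are plain lists of lists. It shows that the map x |-> diag(x, x) preserves the normalized trace and sends projections to projections, so a projection of normalized trace k/2^n keeps that value at every later stage of the chain.

```python
from fractions import Fraction

def embed(x):
    """Send a square matrix x to the block-diagonal matrix [[x, 0], [0, x]]."""
    n = len(x)
    zeros = [0] * n
    top = [list(row) + zeros for row in x]
    bottom = [zeros + list(row) for row in x]
    return top + bottom

def normalized_trace(x):
    """Usual trace divided by the matrix size, so the identity has trace 1."""
    return Fraction(sum(x[i][i] for i in range(len(x))), len(x))

def dyadic_projection(k, n):
    """Diagonal projection in the 2^n x 2^n matrices with k ones on the diagonal."""
    size = 2 ** n
    return [[1 if i == j and i < k else 0 for j in range(size)]
            for i in range(size)]

def matmul(a, b):
    """Plain matrix product of two square matrices of the same size."""
    size = len(a)
    return [[sum(a[i][m] * b[m][j] for m in range(size)) for j in range(size)]
            for i in range(size)]

p = dyadic_projection(3, 3)   # an 8 x 8 projection with normalized trace 3/8
q = embed(p)                  # its image in the 16 x 16 matrices
print(normalized_trace(p), normalized_trace(q))
print(matmul(q, q) == q)      # the image is still a projection
```

Only the dyadic fractions k/2^n appear at finite stages; the remaining values in [0,1] arise only after the completion step that Baez mentions, which this sketch does not attempt. ]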

... the environment ...[is]... a joint Hilbert space in

which this operator algebra sits in different positions ... if

these relative positions are defined in an appropriate way in

terms of modular operator algebra concepts (modular inclusions and

intersections with a joint vacuum), then the existence of a

Poincare (or conformal) spacetime symmetry group and of a net of

local algebras (generated from the action of these symmetries on

the monads) are consequences. ...

A recently solved interesting problem of QFT which required a

conceptual insight beyond the standard setting is the quantum

adaptation of Einstein's local covariance principle to QFT in

curved spacetime. The reason why it took such a long time to

understand this issue is that the local (patch-wise) isometric

diffeomorphisms of the classical theory have no straightforward

implementation on the level of quantum states (as compared to the

unitarily implemented standard global spacetime symmetries as

Poincare invariance of the vacuum state in Minkowski spacetime).

The standard formalism for expectation values based on Lagrangian

action functional (or any other quantization formalism) does not

separate states from operators. ... after the algebraic approach

led to such a separation one learned how to ... formulate quantum

local covariance ...[using]... its algebraic functorial

formulation in terms of a functor which relates a category of

causal manifolds with a category of certain algebras, the old

problem one had with states became clear: states are dual to

algebras.

When one dualizes the algebraic statement one finds that only

foleii of states are invariant, the quantum local covariance does

not leave them individually unchanged. The upshot of these

investigations is a new way of looking at QFT: instead of

considering quantum fields on prescibed Lorentzian causally

complete (globally hyperbolic) manifolds, a field theory

model in the new setting is a functor between all causally

complete manifolds and an operator algebraic category (e.g.

the Weyl algebra, the CAR algebra,....).

... the ... quantum version of Einstein's local covariance

... does not support the naive zero point energy counting

arguments as in ... S. Weinberg, Rev. Mod. Phys. 61, (1989) 1 ...

which treat the vacuum as a relativistic quantum mechanical level

system. These arguments have been uncritical used by many

particle physicists ... In a very interesting paper Hollands and

Wald ...[

gr-qc/0405082 ]... show that the local covariance setting

of QFT contradicts such relativistic quantum mechanical picture of

filling momentum space levels ... which is in harmony with the

idea the momentum space (Fourier transform) only acquires its

physical interpretation through covariant localization and not

the other way around. Unfortunately the incorrect idea that QFT is

some sort of relativistic quantum mechanics is extremely

widespread, so that their arguments probably will not get the

attention which they deserve.

Needless to mention that generically curved spacetime reference

states which replace the Minkowski vacuum do lead to nonvanishing

expectation of the correctly (in agreement with the local

covariance principle) defined stress-energy tensor. There is

however a new coupling parameter involving the curvature and

within a curved spacetime setting one has to make assumptions

about its numerical strength. Hollands and Wald show that the

problem of a theory based on a energy-stress tensor quantized

according to the requirement of local covariance does not lead to

such gigantic values for the cosmological constant. ...".

Further, Schroer goes on to say:

"... The string-theorists "only game in town" claim is

based on the belief that the main content of QFT is already

known. But if a theory allows for such a radically different

conceptual setting as I have indicated ... it is quite far from

having reached its closure. It rather seems to call for another

post-renormalization revolutionary step before it can reach

its final form. ...

[ Such a step could be provided by my D4-D5-E6-E7-E8

VoDou Physics Model based on a generalized hyperfinite II1 von

Neumann algebra factor whose basic building block is the real

Clifford algebra Cl(8). ]

... The crisis in particle physics ... finds its most

visible outing in the hegemony of string theory ... As long as

some leading physicists, including Nobel prize winners, are

failing to play their natural role as critical observers (in

contrast to their more critical predecessors as Pauli who kept

particle physics in a healthy rational state), the present

situation will continue and may even deteriorate. ...

There is a related sociological problem. If an idea which

promoted the careers of many physicists is kept alive for such a

long time it becomes immune against criticism. I think nobody

at this late point would seriously expect that somebody who

invested more than 3 decades into a theory which led to tens of

thousands of publications but failed to make contact with real

physics will come up and say "sorry folks this was it"? ...

With a large number of chairs at theoretical physics

departments worldwide being occupied by string theorists I do not

see much hope. ... the chance for a radical change of direction

through newcomers entering particle physics will remain extremely

dim ... Nowadays somebody who has the capabilities and the

guts to resist the lure of the string hegemon in pursuit of his

own original ideas will run a high risk to see the end of his

academic career with no old-fashioned patent office around

which could serve as a temporary shelter. ...".

In the

.mov version of Atiyah's 24 Oct 2005 KITP talk, Atiyah says (at

about 1:12:24) that his class of models is based on "… the past

history of a particle moving as a real particle …", which seems

to me to be the past world-line of the particle. An audience member

describes to Atiyah (at about 1:05:02):

"… a common thread between the class of models you

are suggesting, Connes class of models, and some unsolved problems

in string theory.

So, one simple way to think about the class of models you are

talking about is just to do a power series expansion of x(t-r) in

t and … the higher derivatives of t so then you have an

infinite order differential equation.

Similarly, quantum field theory on a noncommutative spacetime

can be expressed in terms of a star product which is an

exponential of derivatives and therefore is also in some sense a

differential equation with an infinite number of derivatives

and

the best nonperturbative formulation of string theory we have

is string field theory which is expressed in terms of Witten's

star product on strings which is also expressed in terms of some

exponential of derivatives, but which we don't understand how to

grapple with as well …".
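
The first point in that remark is the ordinary Taylor expansion in the delay r: x(t - r) = sum over n of ((-r)^n / n!) times the n-th derivative of x at t, formally exp(-r d/dt) x(t), so an equation involving x(t - r) becomes a differential equation of infinite order. The following minimal Python sketch checks this numerically for one test function. It assumes that the expansion is in the delay r (the remark says "in t") and that x is analytic; the choice x(t) = sin(t) and the name delayed_sine_series are hypothetical, chosen only because the n-th derivative of sin(t) is sin(t + n pi/2).

```python
import math

def delayed_sine_series(t, r, terms=30):
    """Truncated series sum_n (-r)^n / n! * (d^n/dt^n) sin(t).

    Uses the identity d^n/dt^n sin(t) = sin(t + n*pi/2).
    """
    total = 0.0
    for n in range(terms):
        nth_derivative = math.sin(t + n * math.pi / 2)
        total += (-r) ** n / math.factorial(n) * nth_derivative
    return total

t, r = 1.0, 0.5
error = abs(delayed_sine_series(t, r) - math.sin(t - r))
print(error < 1e-9)   # the truncated series reproduces the delayed value
```

Truncating the series at finite order gives an ordinary differential equation of that order, which is why the full delayed dependence corresponds to infinitely many derivatives.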

It seems to me that a natural physical interpretation of that

"common thread" is that strings should be interpreted as world-lines,

NOT as individual particles or precursors of individual

particles.

However, Atiyah himself does not agree. He

said to me by e-mail "Yes my idea is to make things depend on

the past world line of the particle. Your comment on infinite

numbers of derivatives is pertinent but does not really help.

No, I do not think world lines are strings. I should emphasise

that my ideas are very tentative and evolving all the time.".

Quantum Ranger, in a comment on Peter

Woit's blog, asked "… what happens to the missing

"planck-memory", it seems to be forever evolving "backwards", as for

sure, even the Planck-memory has to been formated from a previous

"past"? …".

I agree that is a good question, and it is also something that

nagged in the back of my mind (in the form of why should the memory /

past world-line be cut off at the Planck scale).

If there were no past time cutoff, then the basic entity would be

the entire (back to the big bang?) past history world-line of each

particle. Maybe such a model would be like that of Andrew Gray, who

said in quant-ph/9712037

(in the abstract) "… probabilities are … assigned to entire

fine-grained histories. The formulation is fully relativistic and

applicable to multi-particle systems. It shall be shown that this new

formulation makes the same experimental predictions as quantum field

theory …".

The same Andrew Gray proposed a "Quantum Time Machine" in

version 1 of quant-ph/9804008v1,

but he withdrew that proposal on 8 Aug 2004, the same day that he

posted version 2 of quant-ph/9712037.

Therefore, it seems to me that although Andrew Gray felt his "Quantum

Time Machine" was flawed, he still feels that his formulation of

quantum theory in terms of "entire fine-grained histories", which

sounds to me a lot like Atiyah's model without the Planck-scale

cutoff, is valid.

I wonder whether Atiyah knows of Gray's model, and, if so,

how he (Atiyah) thinks it compares with his (Atiyah's) model.

Peter Woit said in his blog: "… Both Atiyah and Witten are

extremely quick on their feet. … Raoul Bott, who had just walked

away from Atiyah and Witten, shaking his head … told me he found

listening to the two of them "scary" since they were so much quicker

than he was. Bott is a great mathematician also, but one who has to

think everything through slowly and carefully to understand it, quite

different than Atiyah or Witten. …".

Peter Woit's characterization might be that Atiyah is a Hare and

Bott is a Tortoise, yet working together they produced wonderful

results. In an

interview Bott described his work with Atiyah, saying: "… In

most of my papers with Atiyah he would write the final drafts and his

tendency was to make them more abstract. …".

Bott went on to say: "… I like the old way of presenting

things with an example that gives away the secret of the proof rather

than dazzling the audience. … on the whole I like the problems

to be concrete. I'm a bit of an engineer. For instance, in topology

early on the questions were very concrete - we wanted to find a

number! …".

As to physics and physicists, Bott made an observation about the

Princeton IAS under Oppenheimer: "… Oppenheimer had taken

over, and he was very dominant in the physics community. He had a

seminar that every physicist went to. We mathematicians always

thought they ran off like sheep, for we would pick and choose

our seminars! …". Maybe superstring theory under Witten and

Gross is the contemporary manifestation of physicists' sheep-like

behaviour.

Bott, in his interview at

http://www.ams.org/notices/200104/fea-bott.pdf , said "… the

start of my long and wonderful collaboration with Michael Atiyah. We

first of all gave a new proof of the periodicity theorem which fitted

into the K-theory framework … Then Grothendieck, in the purely

algebraic context, gave a … proof …[of]… the

index theorem … using his K-theory in the formal, algebraic way.

… Before, we had taken complex analysis or algebraic geometry as

a given, so that the differential operator was hidden … here,

suddenly the topological twisting of the differential operator came

into the equation. Of course, Atiyah and Singer immediately realized

that this twisting is measured with the homotopy groups of the

classical groups, by the so-called symbol. Eventually the whole

development of index theory fitted the periodicity theorem into the

subject as an integral part. Atiyah very rightly chose Singer to

collaborate on this project. …".

In their book Spin Geometry (Princeton 1989 at page 277), Lawson

and Michelsohn said: "… In 1982, E. Witten found a different

approach … through consideration of symplectic geometry and

supersymmetry. … he outlined a proof of the index theorem for

the Atiyah-Singer operator … however … none of these

methods [including Witten's] applies to prove the index

theorem for families or the Cl_k - index Theorem (in their strong

forms). These theorems in general involve torsion elements in

K-theory which are not detectable by cohomological means.

…".

In his book Introduction to Superstrings and M-theory (Second

Edition, Springer 1999, 1988 at page 338), Michio Kaku said: "…

new developments in supersymmetry have now made it possible to prove

the Atiyah-Singer index theorem from a simple Lagrangian.

Traditionally, the proof of the Atiyah-Singer theorem has been

inaccessible to most physicists because of the intricacies of the

mathematical formulation. …".

Reading those excerpts in sequence leads me to think that a reason

that superstring physicists are so attached to supersymmetry is that

it is only through Witten's supersymmetric approach that they can

understand the Atiyah-Singer index theorem.

However, by restricting themselves to the Witten supersymmetric

construction, the supersymmetry physics people are cutting themselves

off from possibly very fruitful avenues of constructing new and

possibly realistic physics models.

For instance, Lawson and Michelsohn, at page 270 of their book

cited above, said [I have omitted some tildes etc from notation

due to ASCII limitations]: "… Given a real operator …

in the basic case, no information is lost under complexification.

This is not true, however, if one passes to the index theorem for

families. The index of a family of real operators takes its value in

the group KO(A), and .. for example … KO(S^n) = Z_2 for n = 1 (mod

8) but K(S^n) = {0} in these dimensions. For this reason Atiyah and

Singer established a separate index theorem for families of real

operators. It is a more subtle and profound result … the

appropriate theory is not KO-theory … It is the more general

KR-theory …".
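
The contrast between the real and complex cases in that excerpt can be read off from Bott periodicity for spheres. The following is a minimal Python sketch, assuming the groups meant are the reduced ones (the tildes omitted above) and using the standard table of reduced KO-groups of S^n by n mod 8 and reduced K-groups by n mod 2; the names KO_REDUCED, ko_sphere and k_sphere are hypothetical.

```python
# Reduced real K-groups of the n-sphere, indexed by n mod 8 (Bott periodicity).
KO_REDUCED = {0: "Z", 1: "Z2", 2: "Z2", 3: "0", 4: "Z", 5: "0", 6: "0", 7: "0"}

def ko_sphere(n):
    """Reduced KO-group of S^n, as a label."""
    return KO_REDUCED[n % 8]

def k_sphere(n):
    """Reduced complex K-group of S^n: Z in even dimensions, 0 in odd ones."""
    return "Z" if n % 2 == 0 else "0"

for n in (1, 9, 17):
    # In dimensions n = 1 (mod 8) the real group has torsion, the complex one vanishes.
    print(n, ko_sphere(n), k_sphere(n))
```

In these dimensions the Z2 class is invisible after complexification, which is the information loss the excerpt refers to.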

If Kaku's assessment of physicists' inability to understand a

KR-theoretical index theorem is correct, then I share Peter Woit's

sense of loss if Atiyah is not now "working on the relation between

K-theory and physics".

MacDowell-Mansouri Gravity

According to Freund in chapter 21 of his book Supersymmetry (Cambridge 1986)

where chapter 21 is a NON-SUPERSYMMETRY chapter leading up

to a supergravity description in the following chapter 22:

"... Einstein gravity as a gauge theory ...

Whether the gauge group be the Poincare or the [anti-] de-Sitter group,

we expect a set of gauge fields w^ab_u for the Lorentz group

and a further set e^a_u for the translations, ...

Everybody knows though,

that Einstein's theory contains but one spin two field,

originally chosen by Einstein as g_uv = e^a_u e^b_v n_ab

(n_ab = Minkowski metric).

What happened to the w^ab_u ?

The field equations obtained from the Hilbert-Einstein action

by varying the w^ab_u are algebraic in the w^ab_u ... permitting

us to express the w^ab_u in terms of the e^a_u ...

The w do not propagate ...

... We start from the four-dimensional de-Sitter algebra

...so(3,2).

Technically this is the anti-de-Sitter algebra

... We envision space-time as a four-dimensional manifold M.

At each point of M we have a copy of SO(3,2) (a fibre ...) ...

and we introduce the gauge potentials (the connection) h^A_mu(x)

A = 1,..., 10 , mu = 1,...,4. Here x are local coordinates on M.

From these potentials h^A_mu we calculate the field-strengths

(curvature components) [let @ denote partial derivative]

R^A_munu = @_mu h^A_nu - @_nu h^A_mu + f^A_BC h^B_mu h^C_nu

...[where]... the structure constants f^C_AB ...[are for]...

the anti-de-Sitter algebra ....

We now wish to write down the action S as an integral over

the four-manifold M ... S(Q) = INTEGRAL_M R^A /\ R^B Q_AB

where Q_AB are constants ... to be chosen ... we require

... the invariance of S(Q) under local Lorentz transformations

... the invariance of S(Q) under space inversions ...

...[ AFTER A LOT OF ALGEBRA THAT I WON'T TYPE HERE ]...

we shall see ...[that]... the action becomes invariant under

all local [anti]de-Sitter transformations ...[and]...

we recognize ...

the familiar Hilbert-Einstein action with cosmological term

in vierbein notation ...

Variation of the vierbein leads to the Einstein equations with

cosmological term.

Variation of the spin-connection ... in turn ... yield the

torsionless Christoffel connection ... the torsion components

... now vanish. So at this level full sp(4) invariance has

been checked.

... Were it not for the assumed space-inversion invariance ...

we could have had a parity violating gravity. ...

Unlike Einstein's theory ...[MacDowell-Mansouri].... does not

require Riemannian invertibility of the metric. ... the

solution has torsion ... produced by an interference between

parity violating and parity conserving amplitudes.

Parity violation and torsion go hand-in-hand. Independently

of any more realistic parity violating solution of the

gravity equations this raises the cosmological question

whether the universe as a whole is in a space-inversion

symmetric configuration. ...".
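
For readability, the formulas quoted above in ASCII notation can be written in LaTeX as follows. This is only a transcription of what is quoted, under these notational assumptions: @ is rendered as \partial, n_ab as \eta_{ab}, the spacetime index u as \mu, and the /\ in the action as the wedge product (not the cosmological constant); index placement on the structure constants follows the quoted text.

```latex
\begin{align}
g_{\mu\nu} &= e^{a}{}_{\mu}\, e^{b}{}_{\nu}\, \eta_{ab}, \\
R^{A}{}_{\mu\nu} &= \partial_{\mu} h^{A}{}_{\nu} - \partial_{\nu} h^{A}{}_{\mu}
                  + f^{A}{}_{BC}\, h^{B}{}_{\mu}\, h^{C}{}_{\nu},
                  \qquad A = 1,\dots,10, \quad \mu,\nu = 1,\dots,4, \\
S(Q) &= \int_{M} R^{A} \wedge R^{B}\, Q_{AB}.
\end{align}
```

The index range A = 1,...,10 matches the 6 Lorentz potentials w^ab_u plus the 4 translation potentials e^a_u mentioned at the start of the excerpt.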

Ark Jadczyk / John Gonsowski Physics

Outline

Ark Jadczyk physics model discussion - February 2006

based on John Gonsowski Signs of the Times Forum

Date: Sun, 26 Feb 2006 20:54:49 -0500

To: lark1@quantumfuture.net

From: Tony Smith <f75m17h@mindspring.com>

Subject: Signs of Times Forum

Ark, thanks for your message and reference to the Signs of the Times

Forum discussion about some aspects of my web site.

I am a bit late and slow in replying because I have just returned

from a trip to Connecticut (a final illness and funeral, so somewhat

sad and stressful).

A lot of points have been raised in your discussion with John G,

and it is easier for me to deal with them by e-mail to you

and let you post it (or any part of it that you find interesting)

if you want to do so. Feel free to delete stuff you find uninteresting.

Here are some of the points that I see, and some comments in

which I will ignore signature issues to try to keep this short:

As to fundamental structure as it emerges from Clifford algebra,

see my web page at

http://www.valdostamuseum.org/hamsmith/ClifTensorGeom.html

Roughly,

if the universe is describable by a union of all possible

(some very large) real Clifford algebras,

and

if you use real periodicity to factor each Clifford algebra into a

tensor product of Cl(8) tensor algebras

then

that union (in the limit) is a generalization of the well-known

von Neumann hyperfinite II1 factor, where the generalization

is the replacement of the complex 2x2 structures by

the real Cl(8) Clifford algebra.
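
The periodicity factoring can be illustrated by a simple dimension count. The following is a minimal Python sketch under stated assumptions: it uses only the standard facts that the real Clifford algebra Cl(n) has dimension 2^n, that Cl(8) is the algebra of 16 x 16 real matrices, and that Cl(n+8) is Cl(n) tensor Cl(8); the names factor_clifford, clifford_dim and check_periodicity are hypothetical. It checks dimensions only and does not construct the algebras.

```python
def factor_clifford(n):
    """Write n = 8k + r with 0 <= r < 8, so Cl(n) = Cl(r) tensor k copies of Cl(8)."""
    k, r = divmod(n, 8)
    return k, r

def clifford_dim(n):
    """Real dimension of the Clifford algebra Cl(n)."""
    return 2 ** n

def check_periodicity(n):
    """Compare dim Cl(n) with dim Cl(r) times (dim Cl(8))^k."""
    k, r = factor_clifford(n)
    return clifford_dim(n) == clifford_dim(r) * clifford_dim(8) ** k

print(factor_clifford(24))        # three Cl(8) factors, no remainder
print(clifford_dim(8) == 16 ** 2) # Cl(8) has the dimension of 16 x 16 real matrices
print(check_periodicity(27))
```

Each added Cl(8) factor multiplies the matrix size by 16, in the same way that each step of the usual hyperfinite II1 construction multiplies it by 2.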

Then,

if each Cl(8) in each tensor product Clifford algebra "chain"

describes the physics of a small neighborhood in our universe,

each "chain" can (and probably will) "fold up" in such a way that

each Cl(8) neighborhood is connected to other Cl(8) neighborhoods

so that they collectively form a macroscopic region of an 8-dim

spacetime that has a Planck-scale lattice structure.

In this sense, an 8-dim spacetime "emerges" from a primordial

giant "union" or collection of all possible real Clifford algebras.

The 8-dim spacetime has a natural continuum approximation whose

structure is M4 x CP2 with physics somewhat related to the

Kaluza-Klein model of Batakis as described on my web page at

http://www.valdostamuseum.org/hamsmith/YamawakiCP2KKNJL.html#Batakis

This web page also describes in some detail how, in this model,

Nambu-Jona-Lasinio type T-quark condensates, and their connection

to Higgs and Vacua, explain the three peaks of T-quark events that

have been observed by Fermilab (two of those three peaks have been

ignored by Fermilab's official consensus publications, but they are

there nonetheless, and my web site has some discussions about that).

As to where gravity comes from in this picture,

the Cl(8) bivector Lie algebra Spin(8) has a

subalgebra the conformal Spin(2,4) = SU(2,2)

which produces gravity by the MacDowell-Mansouri mechanism

http://www.valdostamuseum.org/hamsmith/cnfGrHg.html#CnfMMgr

Since the conformal group has the anti-deSitter/Poincare group as a

subgroup, it is possible that some regions in our universe

(such as our solar system inside the orbit of Uranus) see

gravity in a non-expanding Poincare phase like the fixed

pennies on an expanding balloon,

while

some regions beyond Uranus see gravity in an expanding conformal phase

like the expanding surface of a balloon.

The picture is motivated by, and the conformal phase is similar to,

Segal's conformal gravity

http://www.valdostamuseum.org/hamsmith/SegalConf.html

and it is consistent with (and effectively explains) both

the Pioneer anomaly and the unusual rotational axis of Uranus.

http://www.valdostamuseum.org/hamsmith/SegalConf3.html#pioneerexpmt

and it also gives Dark Energy : Dark Matter : Ordinary Matter ratios

that are in agreement with the WMAP observations

http://www.valdostamuseum.org/hamsmith/cosconsensus.html#grvphtncc

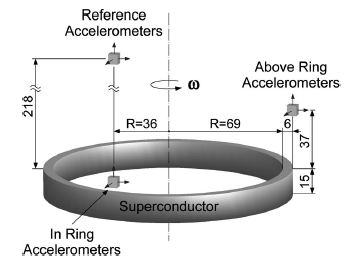

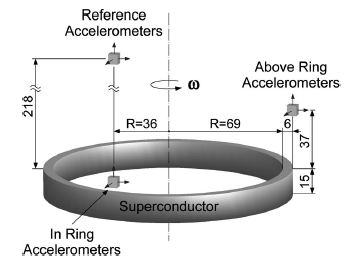

Possible exploitation of Dark Energy using coherent arrays of small

Josephson Junctions is discussed at

http://www.valdostamuseum.org/hamsmith/coscongraviton.html#QEDDE

It is interesting that such coherent Josephson Junction arrays

may be related to coherent tubulin arrays in human consciousness

http://www.valdostamuseum.org/hamsmith/QuanCon.html

and that there might be interesting resonant connections among

such related structures

http://www.valdostamuseum.org/hamsmith/QuantumMind2003.html

I will note that the link immediately above is to a version of

a paper that was barred from the Cornell arXiv due to their

blacklisting me. Note that they have allowed posting by others

on the subject of quantum consciousness, for example, papers

by Fred Thaheld at quant-ph/0509042 and physics/0601060 and

others.

As to why strings might be relevant, see my construction of a

physically realistic string theory model at

http://cdsweb.cern.ch/search.py?recid=730325&ln=en

Due to being blacklisted by the Cornell arXiv, it is not there,

but I put it up on the above site at CERN shortly before

CERN discontinued its EXT series on its preprint server.

As to information and the initial "big bang", I generally

subscribe to the approach of Paula Zizzi as I discuss at

http://www.valdostamuseum.org/hamsmith/cosm.html#QCdSInfl

There are many technical issues, some of which need more work,

but in a short message there is no way that I can deal with

them all. However,

the end result is that I have constructed a physics model

that is in reasonably good (in my opinion) agreement

with all experimental observations. See my web page at

http://www.valdostamuseum.org/hamsmith/2002SESAPS.html

and, for neutrino masses and mixing angles,

http://www.valdostamuseum.org/hamsmith/snucalc.html#asno

I wish that I could say that I would happily discuss by

e-mail and in blogs etc all questions in detail,

but I am only one person with a limited time in life,

and there are MANY very detailed questions, and

I have no help from institutional affiliation or school of coworkers,

so for further details I refer any interested party to the above

links and to all other material on my web site.

I know that there may exist errors in detail,

and that my terminology may not be precisely what everyone else

would like,

and that some parts of my web site etc were written years

before others, and there may be some inconsistencies due

to evolution of my thinking,

but I believe that any inconsistencies and any errors in detail and

terminology are correctable and that

as an overall structure the above model gives a substantially accurate

description of nature and some interesting avenues for future exploration

(particularly with respect to Dark Energy and consciousness).

Tony

Date: Mon, 27 Feb 2006 11:14:51 -0500

To: lark1@quantumfuture.net

From: Tony Smith <f75m17h@mindspring.com>

Subject: Lagrangian

Ark, on the Signs of the Times Forum,

you ask why I use the Lagrangian that I use.

My Lagrangian for 4-dim spacetime with CP2 internal symmetry space

is inherited from a Lagrangian over 8-dim spacetime that comes

from the Cl(8) Clifford Algebra.

The Cl(8) Clifford algebra structures give Lagrangian components

as follows:

8-dim vector part -> 8-dim base manifold over which the Lagrangian

density is integrated

28-dim bivector part -> gauge boson curvature term of Lagrangian density

16-dim spinor part -> 8-dim fermion spinor particle and

8-dim fermion spinor antiparticle part of

Lagrangian density
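
The 8, 28 and 16 in the list above can be recovered from the graded structure of Cl(8) with a short sketch. It assumes only that the grade-k part of Cl(8) has dimension "8 choose k" and that Cl(8) is the algebra of 16x16 real matrices. The variable names are illustrative.

```python
from math import comb

N = 8  # dimension of the vector space generating Cl(8)

# Dimension of each grade k = 0..8 (scalar, vector, bivector, ...)
grades = [comb(N, k) for k in range(N + 1)]
print(grades)  # [1, 8, 28, 56, 70, 56, 28, 8, 1]

total_dim = sum(grades)            # 2**8 = 256
spinor_dim = 2 ** (N // 2)         # 16-dim spinor space (256 = 16 x 16)
half_spinor_dim = spinor_dim // 2  # 8 + 8 half-spinors

print("vector part:", grades[1])
print("bivector part:", grades[2])
print("spinor part:", spinor_dim, "=", half_spinor_dim, "+", half_spinor_dim)
```

The two 8-dim half-spinor spaces are the ones identified above with fermion particles and antiparticles.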

Further details and the physical interpretation of the other parts

of Cl(8) are given on my web site, as are details of how the

4-dim spacetime Lagrangian appears when you break the full octonionic

symmetry of the 8-dim spacetime by introducing a preferred

quaternionic subspace (it can be thought of as "freezing out" at

lower energies such as where our experiments are done).

I should note that the (not 1 to 1) supersymmetry between

the 28 gauge bosons and the 8 fermion particle types

may be useful in cancellations for ultraviolet finiteness for

the 8-dim spacetime, which is useful for the 4-dim spacetime Lagrangian

of the corresponding low-energy theory,

and

that the 3 generations of fermions for 4-dim spacetime come

from the structure of the dimensional reduction from 8-dim spacetime

due to freezing out of a preferred quaternionic subspace.

(The 8-dim spacetime fermions have only one generation.)

As I would hope might be clear from the above,

the Lagrangian is NOT just made up ad hoc,

it is a natural construction based on the structure of Cl(8).

Tony

Date: Mon, 27 Feb 2006 15:16:48 -0500

To: lark1@quantumfuture.net

From: Tony Smith <f75m17h@mindspring.com>

Subject: manifold and density

Ark, you ask "... What is your 8-dimensional manifold,

and

how you define your "Lagrangian density"? ...".

The 8-dim manifold is S1xS7 which is the Shilov boundary of

the bounded complex domain of type IV(8)

that corresponds to

the type BD(I) rank 2 symmetric space Spin(10) / Spin(8)xU(1)

After a particular quaternionic structure is frozen out,

the resulting Kaluza-Klein type space has two parts:

compact internal symmetry space CP2 = SU(3) / U(2)

and

4-dim spacetime S1xS3 which is the Shilov boundary of

the bounded complex domain of type IV(4)

that corresponds to

the type BD(I) rank 2 symmetric space Spin(6) / Spin(4)xU(1)
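
As a rough check on the dimension counting in this construction, here is a minimal sketch. It uses only the standard dimensions of Spin(n), SU(n) and U(n), and the fact that the Shilov boundary S1xS(n-1) of a type IV(n) domain has half the real dimension of the domain. The helper names are illustrative.

```python
def dim_spin(n: int) -> int:
    """Dimension of Spin(n), the same as SO(n): n(n-1)/2."""
    return n * (n - 1) // 2


def dim_su(n: int) -> int:
    """Dimension of SU(n): n^2 - 1."""
    return n * n - 1


def dim_u(n: int) -> int:
    """Dimension of U(n): n^2."""
    return n * n


def type_iv_real_dim(n: int) -> int:
    """Real dimension of Spin(n+2) / Spin(n)xU(1), the type IV(n) domain."""
    return dim_spin(n + 2) - dim_spin(n) - dim_u(1)


domain_8 = type_iv_real_dim(8)  # Spin(10) / Spin(8)xU(1): 45 - 28 - 1
domain_4 = type_iv_real_dim(4)  # Spin(6) / Spin(4)xU(1): 15 - 6 - 1
cp2 = dim_su(3) - dim_u(2)      # CP2 = SU(3) / U(2): 8 - 4

print("type IV(8) domain:", domain_8, "-> Shilov boundary S1xS7:", 1 + 7)
print("type IV(4) domain:", domain_4, "-> Shilov boundary S1xS3:", 1 + 3)
print("CP2:", cp2, "-> 4-dim spacetime + CP2 =", (1 + 3) + cp2)
```

The 4 dimensions of S1xS3 plus the 4 real dimensions of CP2 account for the 8 dimensions of S1xS7.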

Of course, I should say that your work, including papers with Coquereaux,

has been very important to me in trying to understand such

Lie sphere geometry structures.

The complex domain of which the 4-real-dim spacetime is the Shilov boundary

has physical significance in determining the relative strengths of

the forces (gravity, color, weak, electromagnetic)

using techniques motivated by (but not identical to) those of

Armand Wyler in the 1960s-70s with respect to calculation of

the electromagnetic fine structure constant.

If you are interested in Wyler's work, you can go to my web site.

The unpublished papers that he wrote while at the Princeton IAS

under Freeman Dyson's directorship are both in one pdf file at

http://www.valdostamuseum.org/hamsmith/WylerIAS.pdf

It is about 17 MB in size, and is a long download for slow dialup.

Details about the Lagrangian (sorry for the notation, which reflects

the limitations of html when I wrote it up) are at

http://www.valdostamuseum.org/hamsmith/2002SESAPS.html#D4D5E6Lagrangian

You can see an earlier version (not up to date in all technical details,

but generally similar) in more familiar LaTeX notation in a paper

that I put on the xxx.lanl.gov archive before it became the Cornell arXiv

and before I was blacklisted:

http://xxx.lanl.gov/abs/hep-ph/9501252

A very important unconventional technique is application

of the work of Meinhard Mayer (who used the book of Kobayashi

and Nomizu, volume 1) on dimensional reduction of gauge models.

Here are references:

Mayer, Hadronic Journal 4 (1981) 108-152,

and also articles in New Developments in Mathematical Physics,

20th Universitatswochen fur Kernphysik in Schladming

in February 1981 (ed. by Mitter and Pittner),

Springer-Verlag 1981, which articles are:

A Brief Introduction to the Geometry of Gauge Fields (written with Trautman);

The Geometry of Symmetry Breaking in Gauge Theories; and

Geometric Aspects of Quantized Gauge Theories.

If it were more widely known, maybe my work would be more acceptable

to the physics community, but it is not so widely known (of course,

people like Mayer, Trautman, et al know it well, but they are not

a big proportion of the physics community).

Tony

PS - IIRC (if I recall correctly, which may not be the case as my

memory gets more questionable as I age),

when I published my early work (including the Mayer mechanism

stuff) in the 1980s in the International Journal of Theoretical Physics, I

think that Mayer may have expressed an opinion about it,

saying something like "... if even half of what this paper says is true,

it is a very important paper ...".

Unfortunately for me, he may be the only physicist who ever said such

a thing, while a lot of physicists obviously dislike my work and/or me

(since I am blacklisted).

Date: Mon, 27 Feb 2006 20:28:51 -0500

To: lark1@quantumfuture.net